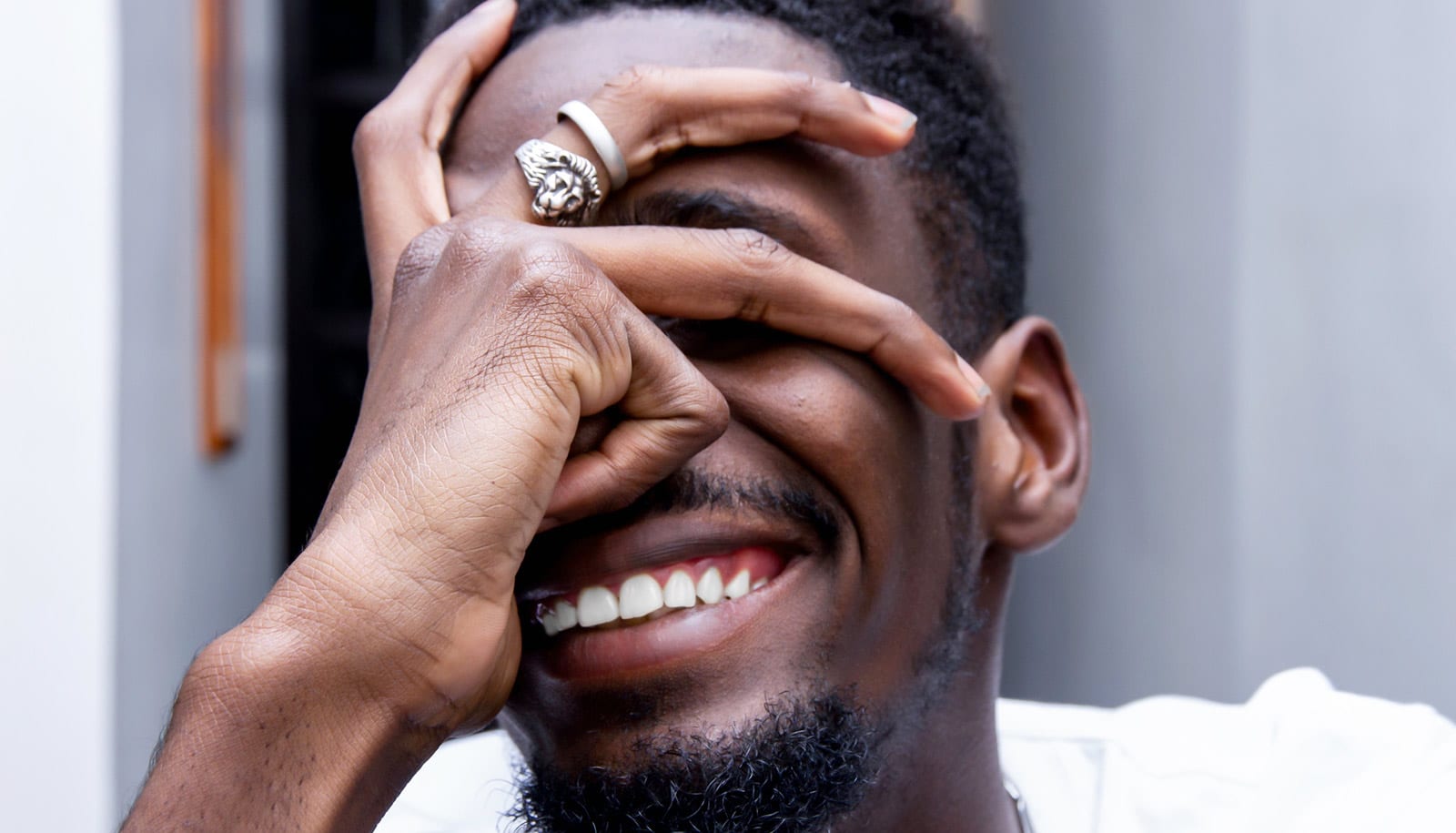

Smiles that lift the cheeks and crinkle the eyes aren’t necessarily a window to a person’s emotions, research finds.

In fact, these “smiling eye” smiles, called Duchenne smiles, seem to be related to smile intensity, rather than acting as an indicator of whether a person is happy or not, says Jeffrey Girard, a former postdoctoral researcher at Carnegie Mellon University’s Language Technologies Institute.

“I do think it’s possible that we might be able to detect how strongly somebody feels positive emotions based on their smile,” says Girard, who joined the psychology faculty at the University of Kansas this past fall. “But it’s going to be a bit more complicated than just asking, ‘Did their eyes move?’”

Whether it’s possible to gauge a person’s emotions based on their behavior is a topic of some debate within the disciplines of psychology and computer science, particularly as researchers develop automated systems for monitoring facial movements, gestures, voice inflections, and word choice.

Duchenne smiles might not be as well known as Mona Lisa smiles or Bette Davis eyes, but there is a camp within psychology that believes they are a useful rule of thumb for gauging happiness. But another camp is skeptical. Girard, who studies facial behavior and worked with Carnegie Mellon’s Louis-Philippe Morency to develop a multimodal approach for monitoring behavior, says that some research seems to support the Duchenne smile hypothesis, while other studies demonstrate how it fails.

So Girard and Morency, along with Jeffrey Cohn of the University of Pittsburgh and Lijun Yin of Binghamton University, set out to better understand the phenomenon. They enlisted 136 volunteers who agreed to have their facial expressions recorded as they completed lab tasks designed to make them feel amusement, embarrassment, fear, or physical pain. After each task, the volunteers rated how strongly they felt various emotions.

Finally, the team made videos of the smiles occurring during these tasks and showed them to new participants (i.e., judges), who tried to guess how much positive emotion the volunteers felt while smiling.

A report on their findings appears in the journal Affective Science.

Unlike most previous studies of Duchenne smiles, this work sought spontaneous expressions, rather than posed smiles, and the researchers recorded videos of the facial expressions from beginning to end rather than taking still photos. They also took painstaking measurements of smile intensity and other facial behaviors.

Although Duchenne smiles made up 90% of those that occurred when positive emotion was reported, they also made up 80% of the smiles that occurred when no positive emotion was reported. Concluding that a Duchenne smile must mean positive emotion would thus often be a mistake. On the other hand, the human judges found smiling eyes compelling and tended to guess that volunteers showing Duchenne smiles felt more positive emotion.
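To see why the 90% and 80% figures make a Duchenne smile such weak evidence, it can help to run the numbers through Bayes’ rule. The sketch below is illustrative only and is not the researchers’ analysis: it treats the two reported percentages as conditional probabilities, and the prior values (how often a smile accompanies positive emotion in the first place) are hypothetical, since the article does not report them.

```python
# Illustrative arithmetic only; not the study's method.
# From the article: Duchenne smiles were 90% of smiles with reported positive
# emotion and 80% of smiles without it. Treating these as conditional
# probabilities is an assumption made for this sketch.

def posterior_positive(prior, p_duchenne_given_pos=0.90, p_duchenne_given_not=0.80):
    """Return P(positive emotion | Duchenne smile) using Bayes' rule."""
    numerator = p_duchenne_given_pos * prior
    denominator = numerator + p_duchenne_given_not * (1.0 - prior)
    return numerator / denominator

# How much more likely a Duchenne smile is with positive emotion than without.
likelihood_ratio = 0.90 / 0.80

# Hypothetical priors: the share of smiles accompanied by positive emotion.
for prior in (0.25, 0.50, 0.75):
    print(f"prior={prior:.2f} -> posterior={posterior_positive(prior):.3f}")
```

Under these assumptions, seeing a Duchenne smile moves a 50% prior to only about 53%, because the smile is nearly as common without positive emotion as with it.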

“It is really important to look at how people actually move their faces in addition to how people rate images and videos of faces, because sometimes our intuitions are wrong,” Girard says.

“These results emphasize the need to model the subtleties of human emotions and facial expressions,” says Morency, associate professor in the LTI and director of the MultiComp Lab. “We need to go beyond prototypical expression and take into account the context in which the expression happened.”

It’s possible, for instance, for someone to display the same behavior at a wedding as at a funeral, yet the person’s emotions would be very different.

Automated methods for monitoring facial expression make it possible to examine behavior in much finer detail. Just two facial muscles are involved in Duchenne smiles, but new systems make it possible to look at 30 different muscle movements simultaneously.

Multimodal systems such as the ones being developed in Morency’s lab hold the promise of giving physicians a new tool for assessing mental disorders, and for monitoring and quantifying the results of psychological therapy over time.

“Could we ever have an algorithm or a computer that is as good as humans at gauging emotions? I think so,” Girard says. “I don’t think people have any extrasensory stuff that a computer couldn’t be given somewhere down the road. We’re just not there yet. It’s also important to remember that humans aren’t always so good at this either!”

The National Science Foundation and the National Institutes of Health supported this research.

Source: Carnegie Mellon University