Researchers have discovered a solid-state material that mimics the neural signals responsible for transmitting information in the human brain.

The work is a step toward developing circuitry that functions like the human brain—neuromorphic computing.

The researchers discovered a neuron-like electrical switching mechanism in the solid-state material β’-CuxV2O5—specifically, how it reversibly morphs between conducting and insulating behavior on command.

The team was able to clarify the underlying mechanism driving this behavior by taking a new look at β’-CuxV2O5, a remarkable chameleon-like material that changes with temperature or an applied electrical stimulus.

In the process, they zeroed in on how copper ions move around inside the material and how this subtle dance in turn sloshes electrons around to transform it. Their research reveals that the movement of copper ions is the linchpin of a change in electrical conductivity that can be leveraged to create electrical spikes, much as neurons do in the central nervous system.

Their resulting paper appears in the journal Matter.

Why copy the brain?

In their quest to develop new modes of energy-efficient computing, the broad-based group of collaborators is capitalizing on materials with tunable electronic instabilities to achieve what’s known as neuromorphic computing, or computing designed to replicate the brain’s unique capabilities and unmatched efficiencies.

“Nature has given us materials with the appropriate types of behavior to mimic the information processing that occurs in a brain, but the ones characterized to date have had various limitations,” says co-leader of the study R. Stanley Williams, electrical and computer engineer at Texas A&M University.

“The importance of this work is to show that chemists can rationally design and create electrically active materials with significantly improved neuromorphic properties. As we understand more, our materials will improve significantly, thus providing a new path to the continual technological advancement of our computing abilities.”

Silicon chips at their max

While smartphones and laptops seemingly get sleeker and faster with each iteration, co-first author and chemistry graduate student Abhishek Parija (now at Intel Corporation) notes that new materials and computing paradigms freed from conventional restrictions are required to meet continuing speed and energy-efficiency demands. Those demands are straining the capabilities of silicon computer chips, which are reaching their fundamental limits in terms of energy efficiency. Neuromorphic computing is one such paradigm, and manipulating the switching behavior of new materials is one way to achieve it.

“The central premise—and by extension the central promise—of neuromorphic computing is that we still have not found a way to perform computations in a way that is as efficient as the way that neurons and synapses function in the human brain,” says co-first author and chemistry graduate student Justin Andrews. “Most materials are insulating (not conductive), metallic (conductive), or somewhere in the middle. Some materials, however, can transform between the two states: insulating (off) and conductive (on) almost on command.”

Copper ions are the key

Using an extensive combination of computational and experimental techniques, the team was able not only to demonstrate that this material undergoes a transition driven by changes in temperature, voltage, and electric field strength that can be used to create neuron-like circuitry, but also to explain comprehensively how this transition happens, says co-first author and chemistry graduate student Joseph Handy. Unlike other materials that have a metal-insulator transition (MIT), this material relies on the movement of copper ions within a rigid lattice of vanadium and oxygen.

“We essentially show that a very small movement of copper ions within the structure brings about a massive change in conductance in the whole material,” Handy adds. “Because of this movement of copper ions, the material transforms from insulating to conducting in response to external changes in temperature, applied voltage or applied current. In other words, applying a small electrical pulse allows us to transform the material and save information inside it as it works in a circuit, much like how neurons function in the brain.”

Andrews likens the relationship between the copper-ion movement and electrons on the vanadium structure to a dance.

“When the copper ions move, electrons on the vanadium lattice move in concert, mirroring the movement of the copper ions,” Andrews says. “In this way, incredibly small movements of the copper ions induce large electronic changes in the vanadium lattice without any observable changes in vanadium-vanadium bonding. It’s like the vanadium atoms ‘see’ what the copper is doing and respond.”

The promise of neuromorphic computing

Transmitting, storing, and processing data currently accounts for about 10% of global energy use, but co-leader of the study and chemist Sarbajit Banerjee says extrapolations indicate the demand for computation will be many times higher than the projected global energy supply can deliver by 2040.

Exponential increases in computing capabilities therefore are required for transformative visions, including the Internet of Things, autonomous transportation, disaster-resilient infrastructure, personalized medicine, and other societal grand challenges that otherwise will be throttled by the inability of current computing technologies to handle the magnitude and complexity of human- and machine-generated data.

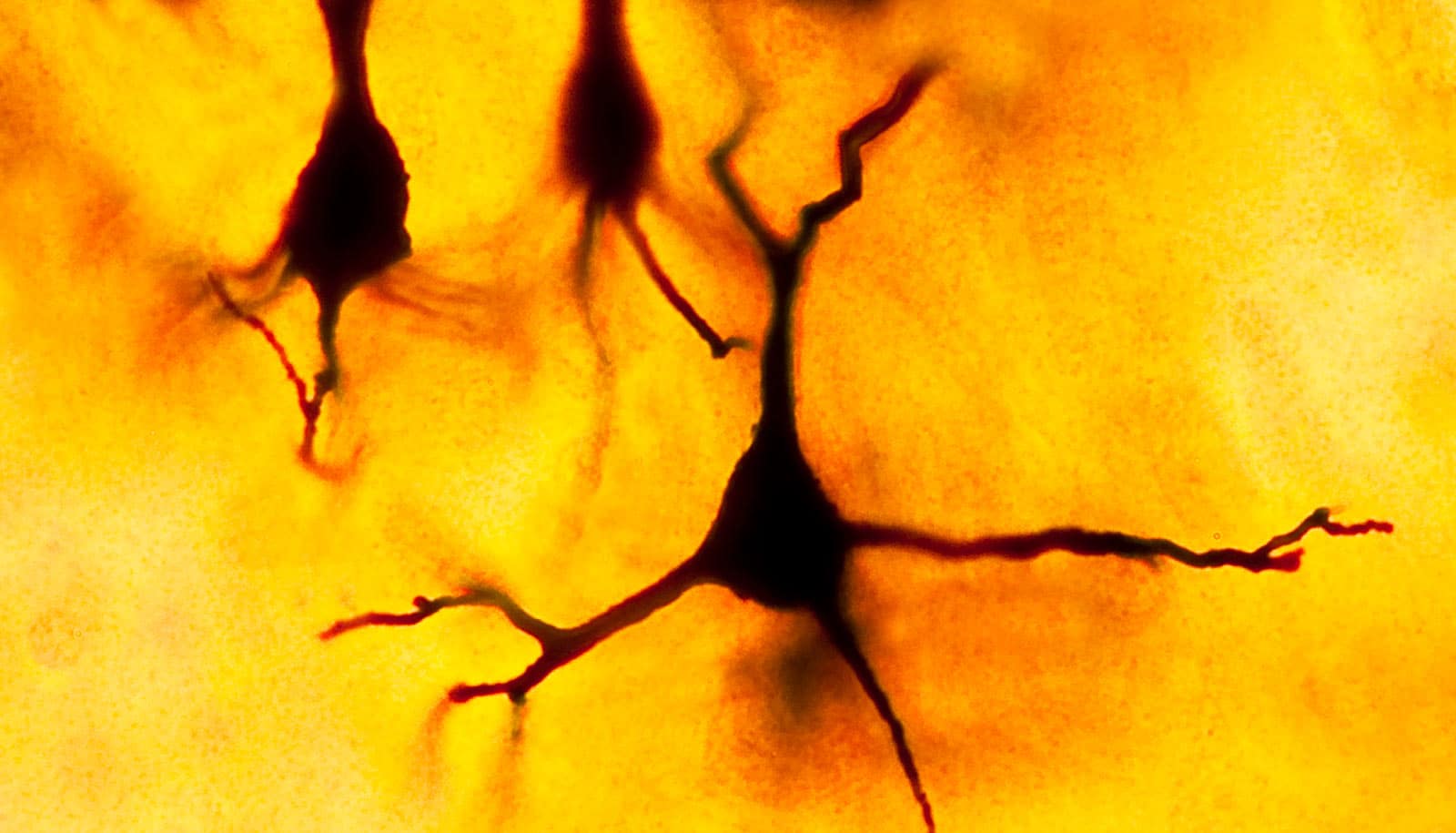

Banerjee says one way to break out of the limitations of conventional computing technology is to take a cue from nature, specifically the neural circuitry of the human brain, which vastly surpasses conventional computer architectures in terms of energy efficiency and also offers new approaches for machine learning and advanced neural networks.

“To emulate the essential elements of neuronal function in artificial circuitry, we need solid-state materials that exhibit electronic instabilities, which, like neurons, can store information in their internal state and in the timing of electronic events,” Banerjee says.

“Our new work explores the fundamental mechanisms and electronic behavior of a material that exhibits such instabilities. By thoroughly characterizing this material, we have also provided information that will instruct the future design of neuromorphic materials, which may offer a way to change the nature of machine computation from simple arithmetic to brain-like intelligence while dramatically increasing both the throughput and energy efficiency of processors.”

Because the components that handle logic operations, store memory, and transfer data are all separate from one another in conventional computer architectures, Banerjee says, those architectures are plagued by inherent inefficiencies in both the time it takes to process information and how physically close together device elements can be before thermal waste and electrons “accidentally” tunneling between components become major problems.

By contrast, in the human brain, logic, memory storage, and data transfer are simultaneously integrated into the timed firing of neurons that are densely interconnected in 3D fanned-out networks. As a result, the brain’s neurons process information at a voltage 10 times lower, and with a synaptic operation energy almost 5,000 times lower, than silicon computing architectures. To come close to achieving this kind of energetic and computational efficiency, Banerjee says, new materials are needed that can undergo rapid internal electronic switching in circuits in a way that mimics how neurons fire in timed sequences.

What needs to happen next?

Handy notes that the team still needs to optimize many parameters, such as transition temperature and switching speed, along with the magnitude of the change in electrical resistance. By determining the underlying principles of the MIT in β’-CuxV2O5 as a prototype material within an expansive field of candidates, however, the team has identified certain design motifs and tunable chemical parameters that should ultimately prove useful in the design of future neuromorphic computing materials.

“This discovery is very exciting because it provides fertile ground for the development of new design principles for tuning materials properties and also suggests exciting new approaches to researchers in the field for thinking about energy efficient electronic instabilities,” Parija says.

“Devices that incorporate neuromorphic computing promise improved energy efficiency that silicon-based computing has yet to deliver, as well as performance improvements in computing challenges like pattern recognition—tasks that the human brain is especially well-equipped to tackle. The materials and mechanisms we describe in this work bring us one step closer to realizing neuromorphic computing and in turn actualizing all of the societal benefits and overall promise that comes with it.”

Researchers from Texas A&M, Lawrence Berkeley National Laboratory, the University at Buffalo, Binghamton University, and Texas A&M University at Qatar contributed to the study, which also relied on work performed at Berkeley Lab’s Molecular Foundry and Advanced Light Source (ALS), the Advanced Photon Source (APS) at Argonne National Laboratory, and the Canadian Light Source.

Primary funding came from the National Science Foundation with additional support from a Texas A&M X-Grant and the Qatar National Research Fund.

Source: Texas A&M University