A new keyboard tool makes it easier for blind internet users or those who have low vision to quickly access options on popular websites.

Browsing through offerings on Airbnb, for instance, means clicking on rows of photos to compare options from prospective hosts. This kind of table-based navigation is increasingly common, but can be tedious or impossible for people who are blind or have low vision.

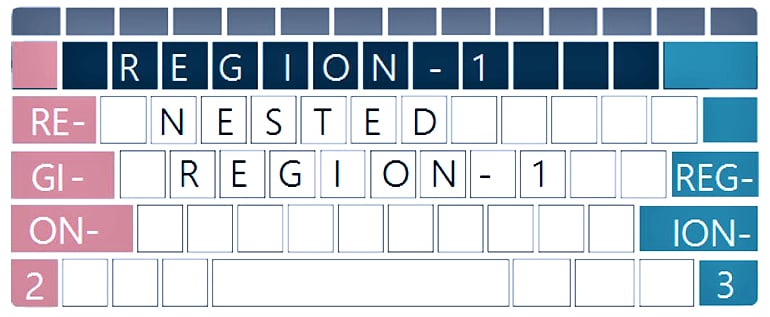

The new approach treats the keyboard as a two-dimensional surface for reaching tables, maps, and nested lists, and it lets blind and low-vision users navigate sites more successfully than they can with screen readers alone.

“We’re not trying to replace screen readers, or the things that they do really well,” says senior author Jennifer Mankoff, a professor in the Paul G. Allen School of Computer Science at the University of Washington. “But tables are one place that it’s possible to do better. This study demonstrates that we can use the keyboard to bring tangible, structured information back, and the benefits are enormous.”

The new tool, Spatial Recognition Interaction Techniques, or SPRITEs, maps different parts of the keyboard to areas or functions on the screen.

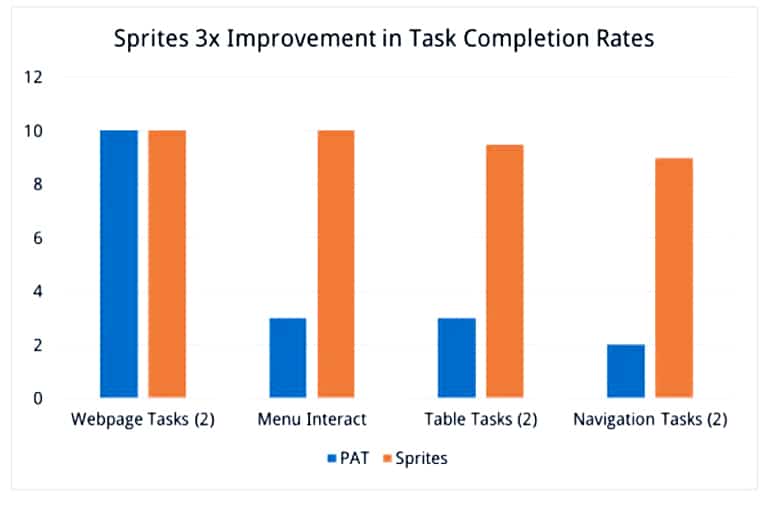

In a trial, 10 people, eight of whom were blind and two of whom had low vision, were asked to complete a series of tasks using their favorite screen reader technology, and then using that technology plus SPRITEs. After a 15-minute tutorial, three times as many participants were able to complete spatial web-browsing tasks within the given time limit using SPRITEs, even though all were experienced with screen readers.

Users press keys to prompt the screen reader to move to certain parts of the website. For instance, the number keys along the top of the keyboard map to menu buttons. Double-tapping a number key opens that menu item’s submenu, and then the top row of letters lets the user select each item in the submenu. For tables and maps, the keys on the outside edge of the keyboard act like coordinates that let the user navigate to different areas of the two-dimensional feature.

For example, tapping a number key might open an icon for each Airbnb menu option. Then tapping the letter “u” could read out the entry that says whether this host will accept pets. (The Airbnb example illustrates how the system could work; the system’s current implementation is confined to wiki-style webpages.)
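The key mapping described above can be pictured with a minimal sketch. This is not the SPRITEs code: the function names, the sample menu and table, and the choice of the left-edge keys for table rows are all assumptions made for illustration.

```python
# Hypothetical sketch of a keyboard-to-page mapping; not the SPRITEs implementation.
NUMBER_ROW = list("1234567890")                 # assumed: top-level menu items / table columns
TOP_LETTER_ROW = list("qwertyuiop")             # assumed: items inside an opened submenu
LEFT_EDGE = ["`", "Tab", "CapsLock", "Shift"]   # assumed: table rows


def build_menu_keymap(menu):
    """Pair each number key with a menu title and a letter-key map of its submenu."""
    keymap = {}
    for key, (title, items) in zip(NUMBER_ROW, menu.items()):
        keymap[key] = (title, dict(zip(TOP_LETTER_ROW, items)))
    return keymap


def lookup_cell(table, row_key, column_key):
    """Treat an edge key and a number key as (row, column) coordinates into a table."""
    row = LEFT_EDGE.index(row_key)
    col = NUMBER_ROW.index(column_key)
    return table[row][col]


# Invented sample data, loosely modeled on the listing example in the text.
menu = {
    "Listing": ["Photos", "Price", "Reviews"],
    "House rules": ["Pets", "Smoking"],
}
keymap = build_menu_keymap(menu)
title, submenu = keymap["2"]
print(title, "->", submenu["q"])

table = [
    ["Host", "Price", "Pets"],
    ["Ana", "$80", "Yes"],
    ["Ben", "$95", "No"],
]
print(lookup_cell(table, "Tab", "3"))
```

Because each key always points to the same position, a user who has learned the layout can jump straight to an entry instead of stepping through every item in order.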

“Rather than having to browse linearly through all the options, our tool lets people learn the structure of the site and then go right there,” Mankoff says. “You can learn which part of the keyboard you need to jump right down and check, say, whether dogs are allowed.”

Most of the test participants couldn’t complete a task such as finding an item in a submenu or finding specific information in a table using their favorite screen reader, but could complete it using SPRITEs.

“A lot more people were able to understand the structure of the web page if we gave them a tactile feedback,” says coauthor Rushil Khurana, a doctoral student at Carnegie Mellon University who conducted the tests in Pittsburgh. “We’re not trying to replace the screen reader, we’re trying to work in conjunction with it.”

For straightforward text-based tasks such as finding a given section header, counting headings in a page, or finding a specific word, participants were able to complete them successfully using either tool.

SPRITEs is one of a suite of tools that Mankoff’s group is developing to help visually impaired users navigate items on a two-dimensional screen. An ethnographic study in 2016 led by doctoral student Mark Baldwin and faculty member Gillian Hayes, both at the University of California, Irvine, observed about a dozen students over four months while they learned to use accessible computing tools, in order to find areas for improvement in screen reading technology.

Now that the team has developed and tested SPRITEs, it plans to make the system more robust for any website and then add it to WebAnywhere, a free, online screen reader. Adding SPRITEs would let users navigate with their keyboard while using the WebAnywhere plugin to read information displayed on a web page. The team also plans to develop a similar technique that would augment screen-reading technology on mobile devices.

“We hope to deploy something that will make a difference in people’s lives,” Mankoff says.

The researchers will present the tool this week at the CHI 2018 conference in Montreal.

Other coauthors are from Carnegie Mellon University. The US Department of Health and Human Services funded the research.

Source: University of Washington