A new system called BrainNet lets three people play a Tetris-like game using a brain-to-brain interface.

This is the first demonstration of two things: a brain-to-brain network of more than two people, and a person who can both receive information from others and send information to them using only their brain.

“Humans are social beings who communicate with each other to cooperate and solve problems that none of us can solve on our own,” says corresponding author Rajesh Rao, a professor in the Paul G. Allen School of Computer Science & Engineering and a co-director of the Center for Neurotechnology at the University of Washington.

“We wanted to know if a group of people could collaborate using only their brains. That’s how we came up with the idea of BrainNet: where two people help a third person solve a task.”

How to play the game

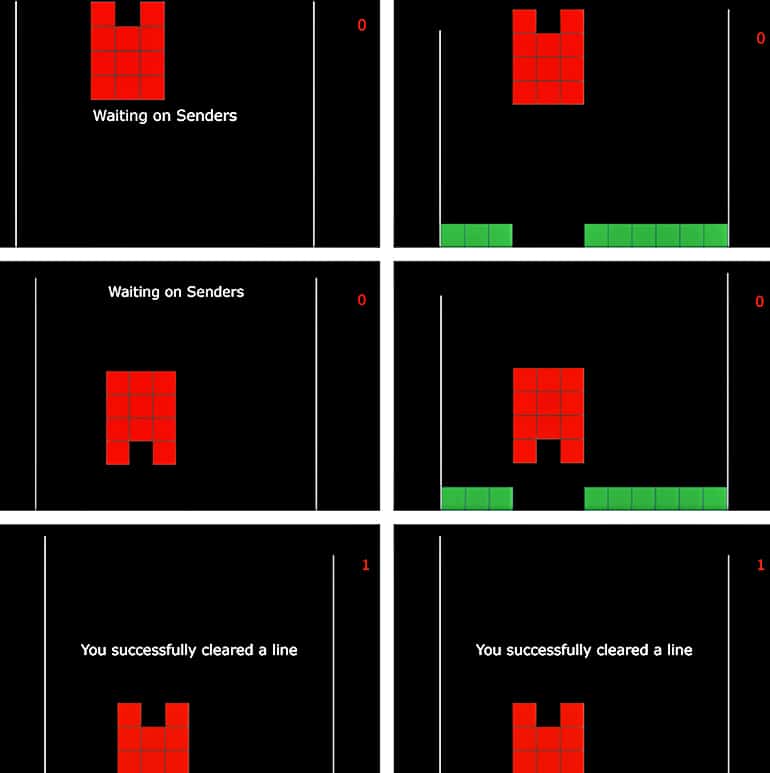

As in Tetris, the game shows a block at the top of the screen and a line that needs to be completed at the bottom. Two people, the Senders, can see both the block and the line but can’t control the game. The third person, the Receiver, can see only the block, not the line, but can tell the game whether to rotate the block so that it completes the line.

Each Sender decides whether the block needs to be rotated and then passes that information from their brain, through the internet, and to the brain of the Receiver. Then the Receiver processes that information and sends a command—to rotate or not rotate the block—to the game directly from their brain, hopefully completing and clearing the line.

The team asked five groups of participants to play 16 rounds of the game. For each group, all three participants were in different rooms and couldn’t see, hear, or speak to one another.

Each Sender could see the game displayed on a computer screen. The screen also showed the word “Yes” on one side and the word “No” on the other side. Beneath the “Yes” option, an LED flashed 17 times per second. Beneath the “No” option, an LED flashed 15 times per second.

“Once the Sender makes a decision about whether to rotate the block, they send ‘Yes’ or ‘No’ to the Receiver’s brain by concentrating on the corresponding light,” says first author Linxing Preston Jiang, a student in the Allen School’s combined bachelor’s/master’s degree program.

The tech behind BrainNet

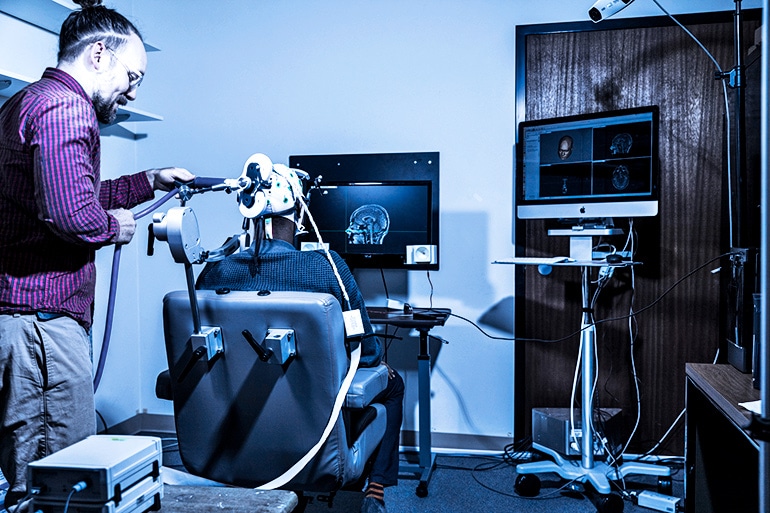

The Senders wore electroencephalography caps that picked up electrical activity in their brains. The lights’ different flashing patterns trigger unique types of activity in the brain, which the caps can pick up.

As each Sender stared at the light corresponding to their selection, the cap picked up those signals, and the computer provided real-time feedback by displaying a cursor on the screen that moved toward their desired choice. The selections were then translated into a “Yes” or “No” answer that could be sent over the internet to the Receiver.
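One way to picture how a computer might tell the two lights apart is to compare how strongly the recorded signal oscillates at each flicker rate. The sketch below is an illustration only, not the researchers' actual decoding method, which the article does not describe. The 17 and 15 flashes per second come from the article; the function names, the 250-samples-per-second rate, the four-second window, and the synthetic "EEG" signal are all assumptions made for the example.

```python
import math
import random

YES_HZ = 17.0  # flicker rate beneath "Yes" (from the article)
NO_HZ = 15.0   # flicker rate beneath "No" (from the article)


def power_at(samples, sample_rate, target_hz):
    """Signal power at one frequency, via a single-bin discrete Fourier transform."""
    step = 2 * math.pi * target_hz / sample_rate
    re = sum(x * math.cos(step * i) for i, x in enumerate(samples))
    im = sum(x * math.sin(step * i) for i, x in enumerate(samples))
    return re * re + im * im


def decode_choice(samples, sample_rate):
    """Return "Yes" or "No" depending on which flicker rate dominates the signal."""
    yes_power = power_at(samples, sample_rate, YES_HZ)
    no_power = power_at(samples, sample_rate, NO_HZ)
    return "Yes" if yes_power > no_power else "No"


def synthetic_eeg(attended_hz, sample_rate=250, seconds=4.0, seed=0):
    """Hypothetical stand-in for a recording: a sinusoid at the attended rate plus noise."""
    rng = random.Random(seed)
    n = int(sample_rate * seconds)
    return [
        math.sin(2 * math.pi * attended_hz * i / sample_rate) + rng.gauss(0.0, 1.0)
        for i in range(n)
    ]


if __name__ == "__main__":
    print(decode_choice(synthetic_eeg(YES_HZ), 250))
    print(decode_choice(synthetic_eeg(NO_HZ), 250))
```

Real recordings are far noisier than this toy signal, so a working system would likely need filtering, multiple electrodes, and a decision threshold rather than a simple comparison.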

“To deliver the message to the Receiver, we used a cable that ends with a wand that looks like a tiny racket behind the Receiver’s head. This coil stimulates the part of the brain that translates signals from the eyes,” says coauthor Andrea Stocco, an assistant professor in the psychology department and the Institute for Learning & Brain Sciences.

“We essentially ‘trick’ the neurons in the back of the brain to spread around the message that they have received signals from the eyes. Then participants have the sensation that bright arcs or objects suddenly appear in front of their eyes.”

If the answer was, “Yes, rotate the block,” then the Receiver would see the bright flash. If the answer was “No,” then the Receiver wouldn’t see anything. The Receiver received input from both Senders before making a decision about whether to rotate the block. Because the Receiver also wore an electroencephalography cap, they used the same method as the Senders to select yes or no.

‘Good’ and ‘bad’ teammates

The Senders got a chance to review the Receiver’s decision and send corrections if they disagreed. Then, once the Receiver sent a second decision, everyone in the group found out if they cleared the line. On average, each group successfully cleared the line 81 percent of the time, or in about 13 out of 16 trials.

The researchers wanted to know if the Receiver would learn over time to trust one Sender over the other based on their reliability. The team purposely picked one of the Senders to be a “bad Sender” and flipped their responses in 10 out of the 16 trials—so that a “Yes, rotate the block” suggestion would be given to the Receiver as “No, don’t rotate the block,” and vice versa. Over time, the Receiver switched from being relatively neutral about both Senders to strongly preferring the information from the “good Sender.”
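The shift in trust can be illustrated with a toy simulation. This is a sketch under stated assumptions, not the analysis the researchers ran: it assumes the “good Sender” always relays the correct answer, that the flipped trials are chosen at random, and that the Receiver can tell after each round which Sender was right. The 16 trials and 10 flipped responses come from the article; the function name and the smoothed running estimate of reliability are hypothetical choices for the example.

```python
import random

TRIALS = 16          # rounds per group (from the article)
FLIPPED_TRIALS = 10  # rounds in which the "bad Sender" was flipped (from the article)


def simulate_trust(seed=0):
    """Return, for each round, an estimated reliability for each Sender."""
    rng = random.Random(seed)
    # Assumption: which rounds get flipped is picked at random.
    flipped = set(rng.sample(range(TRIALS), FLIPPED_TRIALS))
    correct_counts = {"good": 0, "bad": 0}
    history = []
    for trial in range(TRIALS):
        truth = rng.choice([True, False])  # whether the block needs rotating
        messages = {
            "good": truth,  # assumption: the good Sender is always right
            "bad": (not truth) if trial in flipped else truth,
        }
        for sender, message in messages.items():
            if message == truth:
                correct_counts[sender] += 1
        rounds_seen = trial + 1
        # Smoothed running estimate: starts at 0.5 (neutral) before any evidence.
        history.append(
            {s: (c + 1) / (rounds_seen + 2) for s, c in correct_counts.items()}
        )
    return history


if __name__ == "__main__":
    for round_number, estimate in enumerate(simulate_trust(), start=1):
        print(round_number, round(estimate["good"], 2), round(estimate["bad"], 2))
```

Under these assumptions the estimate for the good Sender climbs toward 1 while the estimate for the bad Sender settles below one half, mirroring the move from neutrality to a clear preference.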

The team hopes that these results pave the way for future brain-to-brain interfaces that allow people to collaborate to solve tough problems that one brain alone couldn’t solve.

The researchers also believe this is an appropriate time to start a larger conversation about the ethics of this kind of brain augmentation research and to develop protocols that ensure people’s privacy is respected as the technology improves. The group is working with the Neuroethics team at the Center for Neurotechnology to address these types of issues.

“But for now, this is just a baby step. Our equipment is still expensive and very bulky and the task is a game,” Rao says. “We’re in the ‘Kitty Hawk’ days of brain interface technologies: We’re just getting off the ground.”

The research appears in Scientific Reports.

The National Science Foundation, a W.M. Keck Foundation Award, and a Levinson Emerging Scholars Award funded the research.

Source: University of Washington