Researchers have developed a new way to find out how the brain singles out specific sounds in distracting settings, non-invasively mapping sustained auditory selective attention in the human brain.

The study lays crucial groundwork for tracking deficits in auditory attention due to aging, disease, or brain trauma and for creating clinical interventions, like behavioral training, that could correct or prevent hearing issues.

“Deficits in auditory selective attention can happen for many reasons—concussion, stroke, autism or even healthy aging. They are also associated with social isolation, depression, cognitive dysfunction and lower work force participation. Now, we have a clearer understanding of the cognitive and neural mechanisms responsible for how the brain can select what to listen to,” says Lori Holt, professor of psychology in the Dietrich College of Humanities and Social Sciences and a faculty member of the Center for the Neural Basis of Cognition (CNBC) at Carnegie Mellon University.

To determine how the brain can listen out for important information in different acoustic frequency ranges—similar to paying attention to the treble or bass in a music recording—eight adults listened to one series of short tone melodies and ignored another distracting one, responding when they heard a melody repeat.

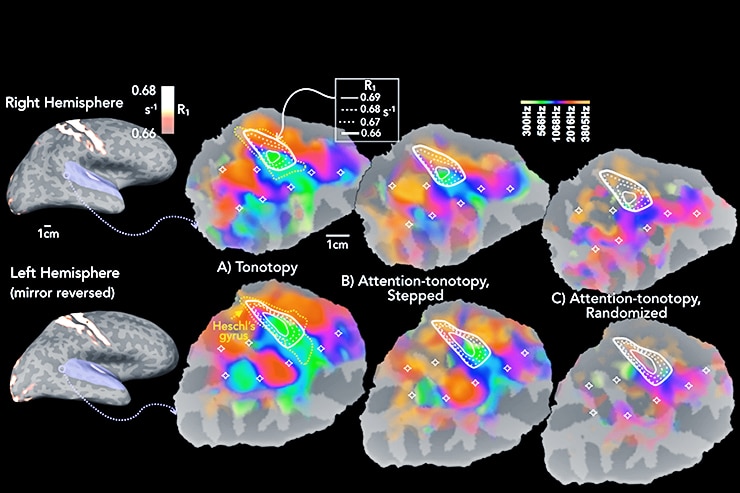

To understand how paying attention to the melodies changed brain activation, the researchers took advantage of a key way that sound information is laid out across the surface, or cortex, of the brain.

The cortex contains many “tonotopic” maps of auditory frequency, where each map represents frequency a little like an old radio display, with low frequencies on one end, going to high on the other. These maps are put together like pieces of a puzzle in the top part of the brain’s temporal lobes.

When people in the MRI scanner listened to the melodies at different frequencies, the parts of the maps tuned to these frequencies were activated. What was surprising was that just paying attention to these frequencies activated the brain in a very similar way—not only in a few core areas, but also over much of the cortex where sound information is known to arrive and be processed.

The researchers then used a new high-resolution brain imaging technique called multiparameter mapping to see how the activation from hearing, or just paying attention to, different frequencies related to another key brain feature: myelination. Myelin is the “electrical insulation” of the brain, and brain regions differ a lot in how much myelin insulation is wrapped around the parts of neurons that transmit information.

In comparing the frequency and myelin maps, the researchers found that they were closely related in specific areas: if there was an increase in the amount of myelin across a small patch of cortex, there was also an increase in how strong a preference neurons had for particular frequencies.

“This was an exciting finding because it potentially revealed some shared ‘fault lines’ in the auditory brain,” says Frederic Dick, professor of auditory cognitive neuroscience at Birkbeck College and University College London.

“Like earth scientists who try to understand what combination of soil, water, and air conditions makes some land better for growing a certain crop, as neuroscientists we can start to understand how subtle differences in the brain’s functional and structural architecture might make some regions more ‘fertile ground’ for learning new information like language or music,” Dick says.

The researchers report their findings in the Journal of Neuroscience.

Carnegie Mellon’s Matt Lehet and Tim Keller, University College London’s Martina F. Callaghan, and Martin Sereno of San Diego State University also participated in the research.

Carnegie Mellon alumnus Jonathan Rothberg funded this work.

Source: Carnegie Mellon University