Using a brain-computer interface, a person with ALS who lost the ability to speak created text on a computer at rates that approach the speed of regular speech just by thinking of saying the words.

In a new study published in Nature, the researchers describe using sensors implanted in areas of the cerebral cortex associated with speech to accurately turn the patient’s brain activity into text.

The clinical trial participant—who can no longer use the muscles of her lips, tongue, larynx, and jaws to enunciate units of sound clearly—was able to generate 62 words per minute on a computer screen simply by attempting to speak.

This is more than three times as fast as the previous record for assisted communication using implanted brain-computer interfaces (BCIs) and begins to approach the roughly 160-word-per-minute rate of natural conversation among English speakers.

The study shows that it’s possible to use neural activity to decode attempted speaking movements with better speed and a larger vocabulary than what was previously possible.

“This is a scientific proof of concept, not an actual device people can use in everyday life,” says Frank Willett, one of the study’s lead authors and a research scientist at Stanford University and the Howard Hughes Medical Institute. “It’s a big advance toward restoring rapid communication to people with paralysis who can’t speak.”

The work is part of the BrainGate clinical trial, directed by Leigh Hochberg, a critical care neurologist and a professor at Brown University’s School of Engineering who is affiliated with the University’s Carney Institute for Brain Science. Jaimie Henderson, a professor of neurosurgery at Stanford, and Krishna Shenoy, a Stanford professor and HHMI investigator, who died before the study was published, are also authors on the study.

The study is the latest in a series of advances in brain-computer interfaces made by the BrainGate consortium, which, along with other BCI researchers, has for several years been developing systems that enable people to generate text through direct brain control. Previous incarnations have involved trial participants thinking about the motions involved in pointing to and clicking letters on a virtual keyboard, and, in 2021, converting a paralyzed person’s imagined handwriting into text on a screen, attaining a speed of 18 words per minute.

“With credit and thanks to the extraordinary people with tetraplegia who enroll in the BrainGate clinical trials and other BCI research, we continue to see the incredible potential of implanted brain-computer interfaces to restore communication and mobility,” says Hochberg, who in addition to his roles at Brown is a neurologist at Massachusetts General Hospital and director of the V.A. Rehabilitation Research and Development Center for Neurorestoration and Neurotechnology in Providence.

One of those extraordinary people is Pat Bennett, who, having learned about the 2021 work, volunteered for the BrainGate clinical trial that year.

Bennett, now 68, is a former human resources director and daily jogger who was diagnosed with ALS (amyotrophic lateral sclerosis) in 2012. For Bennett, the progressive neurodegenerative disease stole her ability to speak intelligibly. While Bennett’s brain can still formulate directions for generating units of sound called phonemes, her muscles can’t carry out the commands.

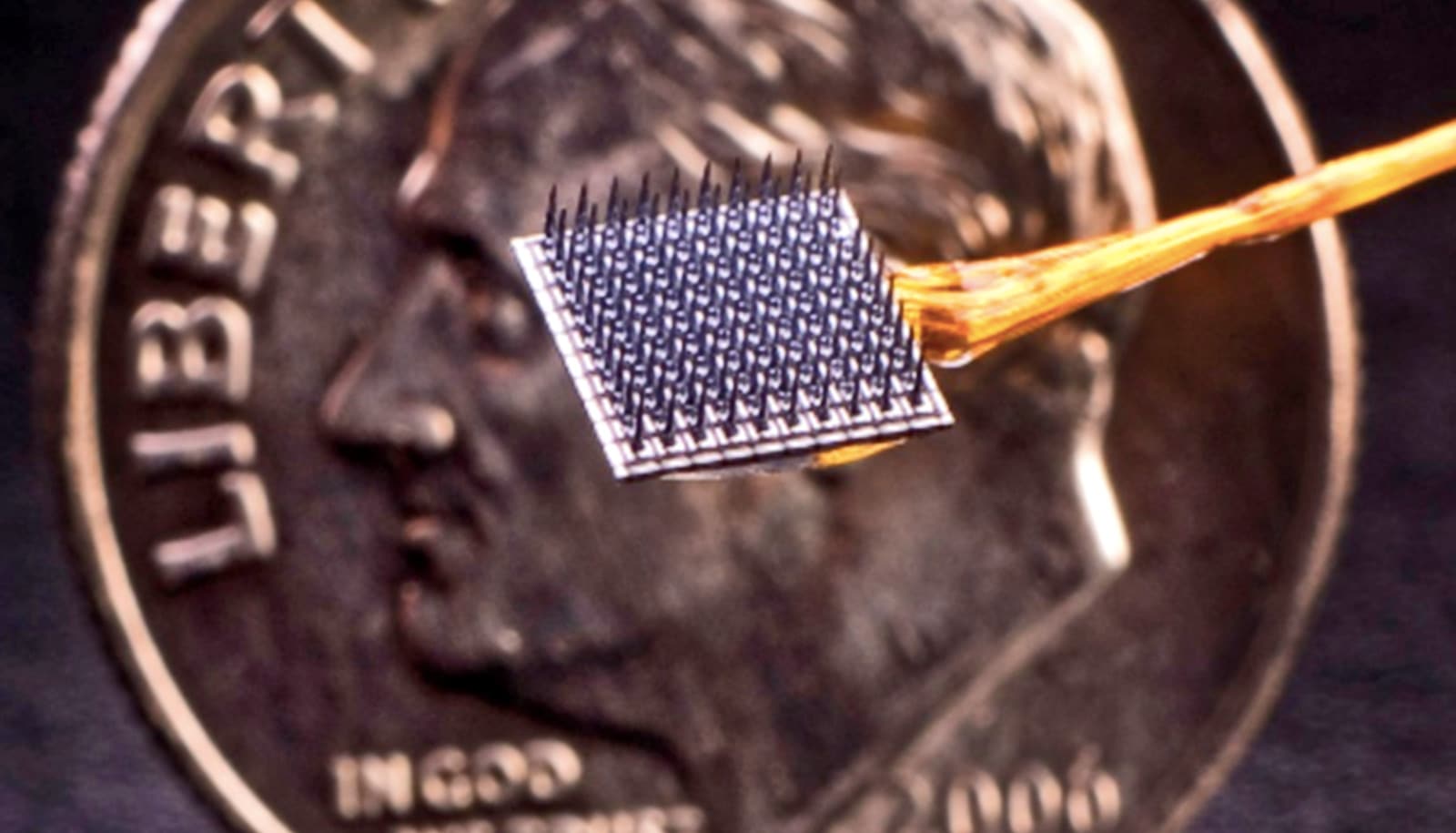

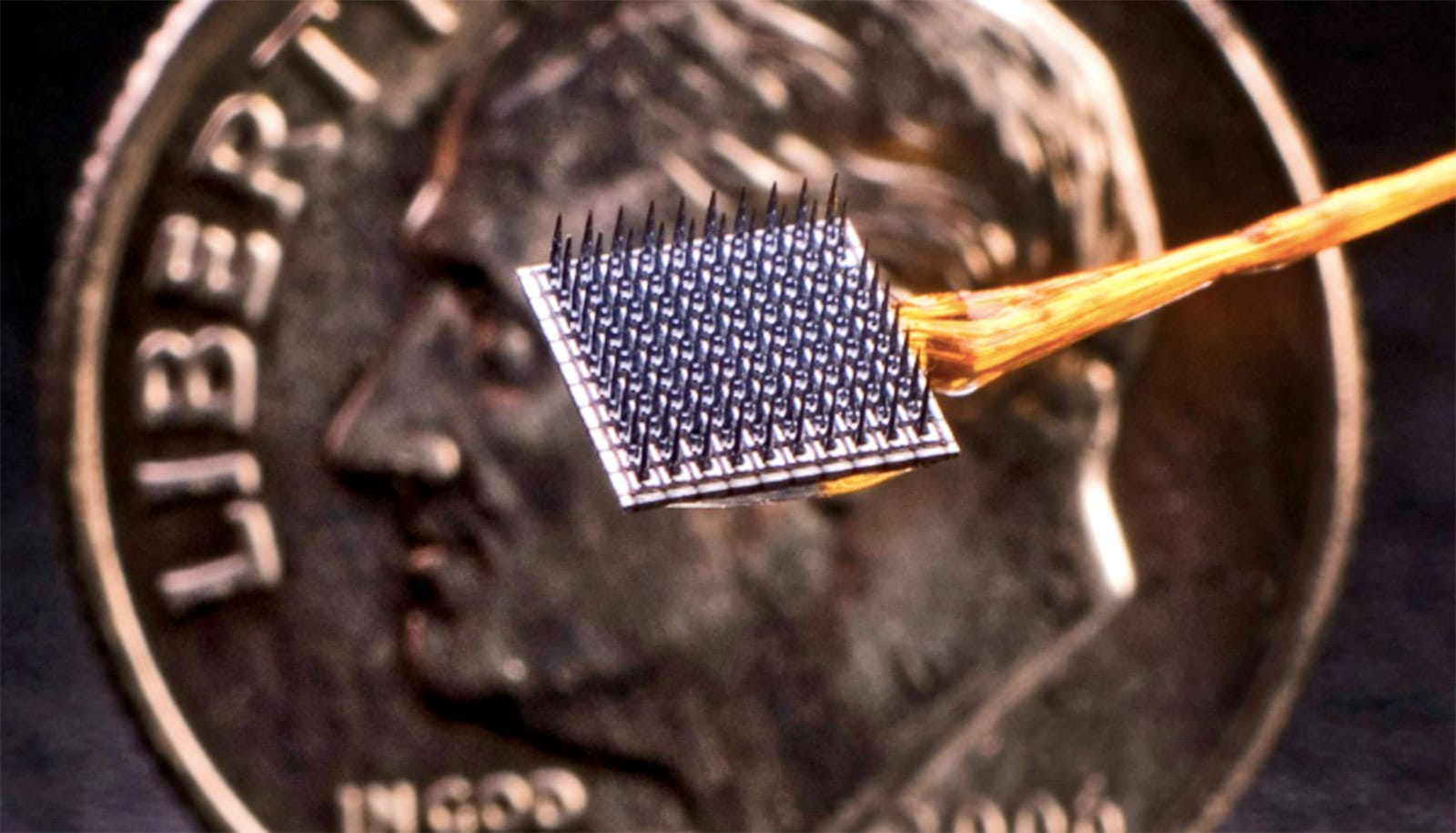

As part of the clinical trial, Henderson, a neurosurgeon, placed two pairs of tiny electrodes, each about the size of a baby aspirin, in two separate speech-related regions of Bennett’s cerebral cortex. An artificial-intelligence algorithm receives and decodes electronic information emanating from Bennett’s brain, eventually teaching itself to distinguish the distinct brain activity associated with her attempts to formulate each of the phonemes (such as the “sh” sound) that are the building blocks of spoken English.

The decoder then feeds its best guess concerning the sequence of Bennett’s attempted phonemes into a language model, which acts essentially as a sophisticated autocorrect system. This system then converts the streams of phonemes into the sequence of words they represent, which are then displayed on the computer screen.
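To make the phoneme-to-word step concrete, here is a minimal sketch in Python. It is not the study's decoder: the tiny pronunciation lexicon, the word probabilities, and the function name `decode_words` are all hypothetical, and the sketch only splits an already-correct phoneme sequence into the most probable run of known words. A real language model would also have to tolerate mistaken phonemes, which this example does not attempt.

```python
import math

# Hypothetical toy lexicon: phoneme sequences (ARPAbet-style symbols) -> words.
LEXICON = {
    ("AY",): "i",
    ("N", "IY", "D"): "need",
    ("W", "AO", "T", "ER"): "water",
    ("HH", "EH", "L", "P"): "help",
}

# Hypothetical unigram word probabilities standing in for a language model.
WORD_LOGPROB = {
    "i": math.log(0.4),
    "need": math.log(0.3),
    "water": math.log(0.2),
    "help": math.log(0.1),
}


def decode_words(phonemes):
    """Split a phoneme sequence into the most probable sequence of lexicon words.

    Uses dynamic programming over prefix lengths. Returns an empty list if the
    sequence cannot be fully covered by lexicon entries.
    """
    n = len(phonemes)
    # best[k] = (log-probability, words) for the best parse of phonemes[:k]
    best = [(-math.inf, [])] * (n + 1)
    best[0] = (0.0, [])
    for end in range(1, n + 1):
        for start in range(end):
            prev_score, prev_words = best[start]
            if prev_score == -math.inf:
                continue  # no valid parse reaches this start position
            word = LEXICON.get(tuple(phonemes[start:end]))
            if word is None:
                continue  # this span is not a known word
            score = prev_score + WORD_LOGPROB[word]
            if score > best[end][0]:
                best[end] = (score, prev_words + [word])
    return best[n][1]


if __name__ == "__main__":
    print(" ".join(decode_words(["AY", "N", "IY", "D", "W", "AO", "T", "ER"])))
```

With only four words the search is trivial. The point is the shape of the pipeline: phonemes go in, a lexicon proposes candidate words, and a probability model picks among them.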

To teach the algorithm to recognize which brain-activity patterns were associated with which phonemes, Bennett engaged in about 25 training sessions, each lasting about four hours, in which she attempted to repeat sentences chosen randomly from a large data set.

As part of these sessions, the research team also analyzed the system’s accuracy. They found that when the sentences and the word-assembling language model were restricted to a 50-word vocabulary, the translation system’s error rate was 9.1%. When vocabulary was expanded to 125,000 words, large enough to compose almost anything someone would want to say, the error rate rose to 23.8%.
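The article does not say how the error rate was computed. Assuming it is a word error rate, the usual metric for speech recognition, the figure is the number of word substitutions, insertions, and deletions needed to turn the decoded sentence into the intended one, divided by the number of intended words. The sketch below illustrates that calculation; the function name and example sentences are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance between the two sentences, divided by the
    number of words in the reference (intended) sentence."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference sentence must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # reference word was dropped
                curr[j - 1] + 1,                  # extra word was inserted
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One wrong word out of four intended words.
    print(word_error_rate("i want some water", "i want sum water"))
```

Under this definition, an error rate of 23.8% corresponds to roughly one word in four needing correction.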

The researchers say the figures are far from perfect but represent a giant step forward from prior results using BCIs. They are hopeful about what the system could one day achieve, as is Bennett.

“For those who are nonverbal, this means they can stay connected to the bigger world, perhaps continue to work, maintain friends and family relationships,” Bennett wrote via email. “Imagine how different conducting everyday activities like shopping, attending appointments, ordering food, going into a bank, talking on a phone, expressing love or appreciation—even arguing—will be when nonverbal people can communicate their thoughts in real time.”

The National Institutes of Health, the US Department of Veterans Affairs, Howard Hughes Medical Institute, the Simons Foundation and L. and P. Garlick funded the work.

Source: Brown University