Teaching physics to neural networks lets those networks better adapt to chaos within their environment, researchers report.

The work has implications for improved artificial intelligence (AI) applications ranging from medical diagnostics to automated drone piloting.

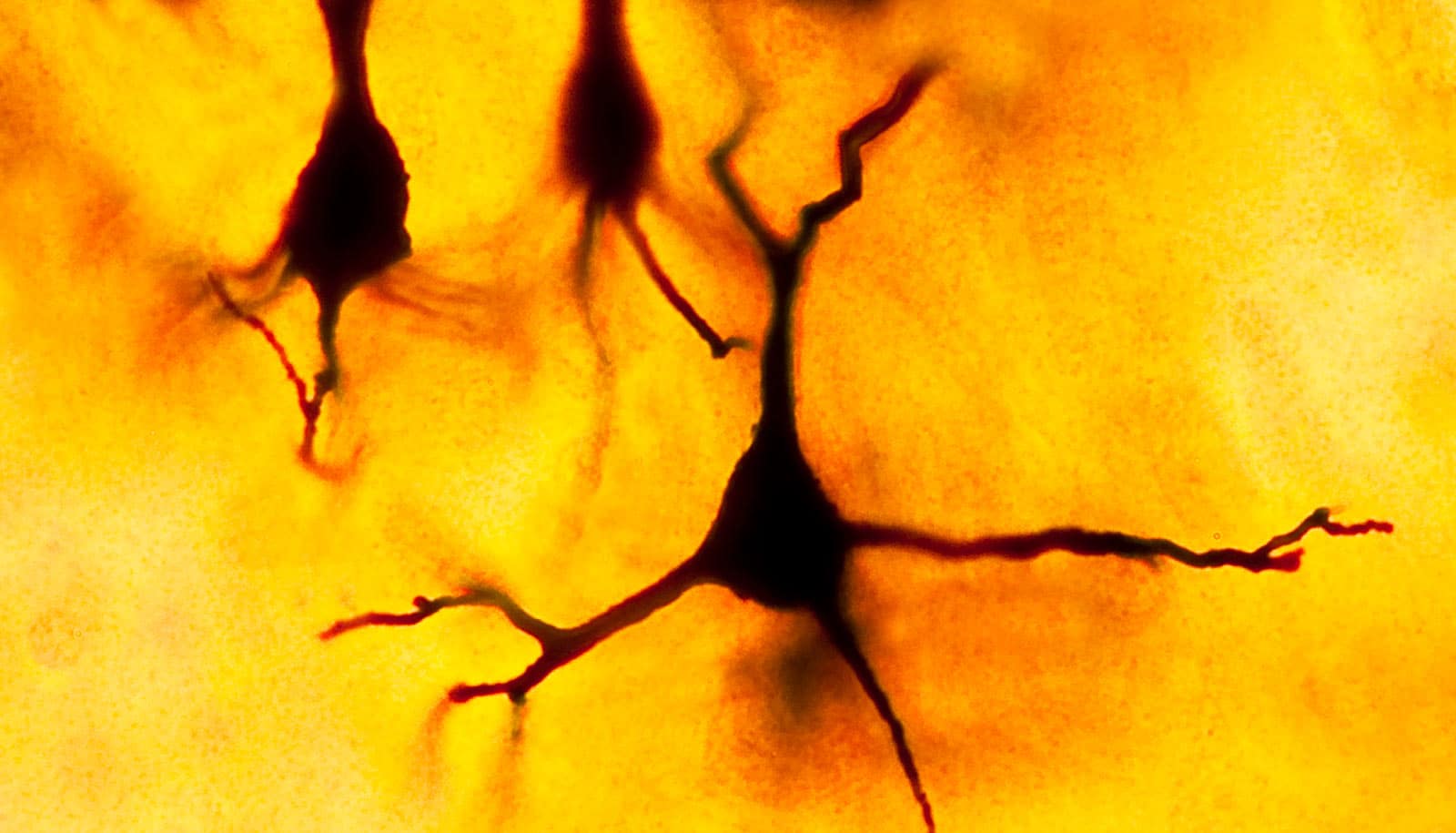

Neural networks are an advanced type of AI loosely based on the way that our brains work. Our natural neurons exchange electrical impulses according to the strengths of their connections.

Artificial neural networks mimic this behavior by adjusting numerical weights and biases during training sessions to minimize the difference between their actual and desired outputs.

For example, a neural network can be trained to identify photos of dogs by sifting through a large number of photos, guessing whether each photo shows a dog, measuring how far off the guess is, and then adjusting its weights and biases so that its next guesses land closer to the truth.
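The guess, measure, adjust cycle can be sketched with the simplest possible "network": a single neuron with one weight and one bias, trained by gradient descent on mean squared error. This is a minimal sketch under stated assumptions. The toy data, learning rate, epoch count, and the function name `train_neuron` are all illustrative and do not come from the study. A real image classifier has millions of weights, but it follows the same loop.

```python
def train_neuron(xs, ys, lr=0.1, epochs=2000):
    """Fit y ≈ w*x + b by gradient descent on mean squared error (illustrative only)."""
    w, b = 0.0, 0.0  # start from an uninformed guess
    n = len(xs)
    for _ in range(epochs):
        # 1. Guess: predictions with the current weight and bias.
        preds = [w * x + b for x in xs]
        # 2. Measure: how far each guess is from the desired output.
        errors = [p - y for p, y in zip(preds, ys)]
        # 3. Adjust: step w and b against the gradient of the mean squared error.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical toy data generated from y = 3x - 1; the neuron must discover w = 3, b = -1.
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [3.0 * x - 1.0 for x in xs]
w, b = train_neuron(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Each pass nudges the parameters slightly; after enough passes the neuron's guesses match the targets.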

The drawback of this kind of training is something called “chaos blindness”: an inability to predict or respond to chaos, the behavior of systems in which tiny differences in starting conditions grow into wildly different outcomes. Conventional AI is chaos blind.

Now, researchers have found that incorporating a Hamiltonian function into neural networks better enables them to “see” chaos within a system and adapt accordingly.

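Simply put, the Hamiltonian embodies the complete information about a dynamic physical system: its total energy, kinetic plus potential, written as a function of the system's positions and momenta. How that energy changes with each position and momentum determines how the system moves.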
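In standard textbook notation, with position q and momentum p, the Hamiltonian H is the total energy, and Hamilton's equations turn its slopes into the motion. The general form below assumes a system whose energy splits into kinetic and potential parts. The second part applies it to the simple pendulum discussed below, assuming a point mass m on a massless rod of length ℓ in gravity g. These symbols are illustrative and are not taken from the study.

```latex
% General form: total energy as a function of position q and momentum p
H(q, p) = T(p) + V(q), \qquad
\frac{dq}{dt} = \frac{\partial H}{\partial p}, \qquad
\frac{dp}{dt} = -\frac{\partial H}{\partial q}

% Simple pendulum (assumed point mass m, rod length \ell, gravity g),
% angle \theta from the vertical, angular momentum p_\theta = m \ell^2 \dot{\theta}
H(\theta, p_\theta) = \frac{p_\theta^2}{2 m \ell^2} + m g \ell \,(1 - \cos\theta)
\quad\Longrightarrow\quad
\dot{\theta} = \frac{p_\theta}{m \ell^2}, \qquad
\dot{p}_\theta = -m g \ell \sin\theta
```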

Picture a swinging pendulum, moving back and forth in space over time. Now look at a snapshot of that pendulum. The snapshot shows where the pendulum is, but it cannot tell you how fast it is moving, which way it is swinging, or where it will go next.

Conventional neural networks operate from a snapshot of the pendulum. Neural networks familiar with the Hamiltonian flow understand the entirety of the pendulum’s movement—where it is, where it will or could be, and the energies involved in its movement.
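Following the Hamiltonian flow can be sketched numerically. This is a minimal sketch, assuming a frictionless pendulum with unit mass, unit rod length, and g = 9.81 (all illustrative values). It uses the leapfrog (Störmer–Verlet) integrator, a standard method for Hamiltonian systems, chosen here for illustration and not necessarily anything the researchers used. Given one position and momentum, repeated steps of Hamilton's equations predict where the pendulum goes next, while the total energy stays nearly constant.

```python
import math

G = 9.81  # gravitational acceleration (m/s^2); assumed
M = 1.0   # bob mass (kg); assumed
L = 1.0   # rod length (m); assumed


def hamiltonian(theta, p):
    """Total energy: kinetic p^2/(2 M L^2) plus potential M G L (1 - cos theta)."""
    return p * p / (2.0 * M * L * L) + M * G * L * (1.0 - math.cos(theta))


def dH_dtheta(theta):
    """Partial derivative of H with respect to the angle."""
    return M * G * L * math.sin(theta)


def dH_dp(p):
    """Partial derivative of H with respect to the angular momentum."""
    return p / (M * L * L)


def leapfrog(theta, p, dt, steps):
    """Follow the Hamiltonian flow with the symplectic leapfrog scheme."""
    trajectory = [(theta, p)]
    for _ in range(steps):
        p -= 0.5 * dt * dH_dtheta(theta)  # half kick: dp/dt = -dH/dtheta
        theta += dt * dH_dp(p)            # drift: dtheta/dt = dH/dp
        p -= 0.5 * dt * dH_dtheta(theta)  # second half kick
        trajectory.append((theta, p))
    return trajectory


# Release the pendulum from rest at 60 degrees and follow it for 10 seconds.
traj = leapfrog(math.radians(60.0), 0.0, dt=0.001, steps=10_000)
energies = [hamiltonian(th, p) for th, p in traj]
drift = max(energies) - min(energies)
print(f"initial energy {energies[0]:.4f} J, max drift {drift:.2e} J")
```

Leapfrog is used because it respects the structure of Hamilton's equations, so energy errors stay small and bounded over long runs instead of accumulating.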

In a proof-of-concept project, the researchers incorporated Hamiltonian structure into neural networks, then applied them to a known model of stellar and molecular dynamics called the Hénon-Heiles model. The Hamiltonian network accurately predicted the dynamics of the system, even as it moved between order and chaos.
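The study's networks are not reproduced here, but the core idea of learning a single scalar energy function and deriving the dynamics from its slopes can be sketched. This is a minimal sketch under stated assumptions. Instead of a neural network, the "model" is a linear combination of candidate polynomial terms (the list `BASIS` is hypothetical). It is fit by least squares so that its Hamilton's-equations derivatives match derivatives sampled from the standard Hénon-Heiles Hamiltonian H = (px² + py²)/2 + (x² + y²)/2 + x²y − y³/3. This simpler linear model stands in for the neural network purely for illustration. A Hamiltonian neural network would typically replace the fixed term list with a trainable network and compute the gradients by automatic differentiation.

```python
import random

# Hypothetical candidate terms for the learned Hamiltonian:
# each entry is (label, gradient of the term w.r.t. (x, y, px, py)).
BASIS = [
    ("px^2", lambda x, y, px, py: (0.0, 0.0, 2 * px, 0.0)),
    ("py^2", lambda x, y, px, py: (0.0, 0.0, 0.0, 2 * py)),
    ("x^2", lambda x, y, px, py: (2 * x, 0.0, 0.0, 0.0)),
    ("y^2", lambda x, y, px, py: (0.0, 2 * y, 0.0, 0.0)),
    ("x*y", lambda x, y, px, py: (y, x, 0.0, 0.0)),
    ("x^2*y", lambda x, y, px, py: (2 * x * y, x * x, 0.0, 0.0)),
    ("y^3", lambda x, y, px, py: (0.0, 3 * y * y, 0.0, 0.0)),
    ("x*y^2", lambda x, y, px, py: (y * y, 2 * x * y, 0.0, 0.0)),
    ("x^3", lambda x, y, px, py: (3 * x * x, 0.0, 0.0, 0.0)),
]


def henon_heiles_derivs(x, y, px, py):
    """True (dx/dt, dy/dt, dpx/dt, dpy/dt) from Hamilton's equations for
    H = (px^2 + py^2)/2 + (x^2 + y^2)/2 + x^2 y - y^3/3."""
    return (px, py, -x - 2 * x * y, -y - x * x + y * y)


def solve(a, b):
    """Solve the square system a c = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    c = [0.0] * n
    for r in reversed(range(n)):
        c[r] = (m[r][n] - sum(m[r][k] * c[k] for k in range(r + 1, n))) / m[r][r]
    return c


def fit_hamiltonian(samples):
    """Least-squares fit so the model's (dH/dpx, dH/dpy, -dH/dx, -dH/dy)
    matches the observed (dx/dt, dy/dt, dpx/dt, dpy/dt)."""
    rows, targets = [], []
    for state, derivs in samples:
        grads = [grad(*state) for _, grad in BASIS]
        # (gradient component, sign, observed derivative) for each of Hamilton's equations.
        for comp, sign, target in ((2, 1.0, derivs[0]), (3, 1.0, derivs[1]),
                                   (0, -1.0, derivs[2]), (1, -1.0, derivs[3])):
            rows.append([sign * gr[comp] for gr in grads])
            targets.append(target)
    n = len(BASIS)
    # Normal equations: (A^T A) c = A^T b.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(n)]
    return dict(zip((name for name, _ in BASIS), solve(ata, atb)))


random.seed(0)
states = [tuple(random.uniform(-0.5, 0.5) for _ in range(4)) for _ in range(200)]
samples = [(s, henon_heiles_derivs(*s)) for s in states]
coeffs = fit_hamiltonian(samples)
for name, c in coeffs.items():
    print(f"{name:>6}: {c:+.4f}")
```

With noise-free samples, the fit should recover 0.5 for the four quadratic terms, 1 for x²y, −1/3 for y³, and essentially 0 for the distractor terms. Because the model is a single energy function, any trajectory it predicts automatically obeys energy conservation.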

“The Hamiltonian is really the ‘special sauce’ that gives neural networks the ability to learn order and chaos,” says corresponding author John Lindner, a visiting researcher at North Carolina State University’s Nonlinear Artificial Intelligence Laboratory (NAIL) and professor of physics at the College of Wooster.

“With the Hamiltonian, the neural network understands underlying dynamics in a way that a conventional network cannot. This is a first step toward physics-savvy neural networks that could help us solve hard problems.”

The work appears in Physical Review E. Partial support for the work came from the Office of Naval Research.

Source: NC State