Engineers have created a program that lets people use their thoughts to control video games.

The innovation is part of research into brain-computer interfaces to help improve the lives of people with motor disabilities.

The researchers incorporated machine learning capabilities into their brain-computer interface, making it a one-size-fits-all solution.

Typically, these devices require extensive calibration for each user, because every brain is different, for healthy and disabled users alike, and that has been a major hurdle to mainstream adoption. This new solution can quickly understand the needs of an individual subject and self-calibrate through repetition. That means multiple patients could use the device without anyone needing to tune it to each individual.

“When we think about this in a clinical setting, this technology will make it so we won’t need a specialized team to do this calibration process, which is long and tedious,” says Satyam Kumar, a graduate student in the lab of José del R. Millán, a professor in the electrical and computer engineering department of the University of Texas at Austin’s Cockrell School of Engineering and in Dell Medical School’s neurology department. “It will be much faster to move from patient to patient.”

The research on the calibration-free interface appears in PNAS Nexus.

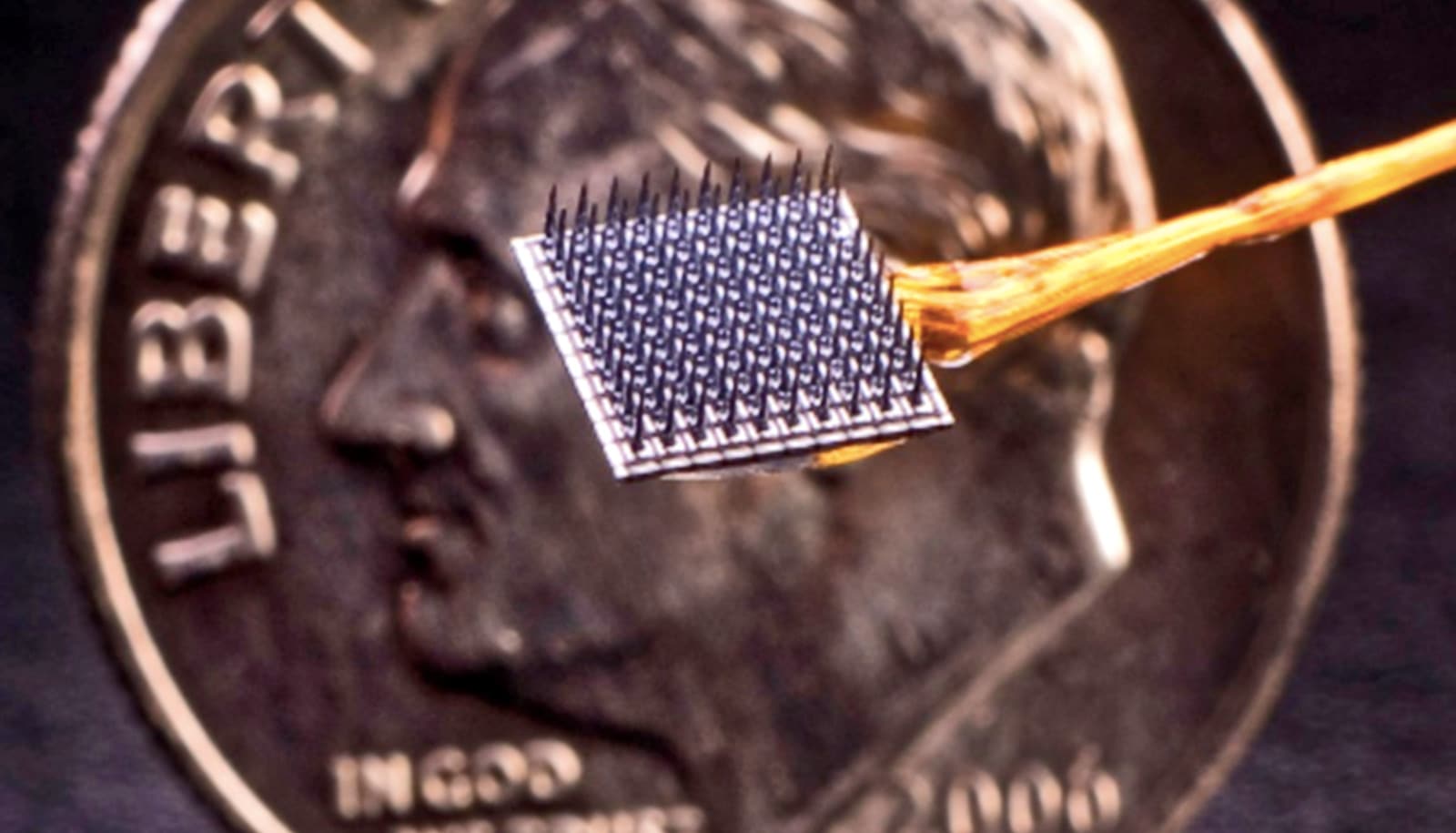

The subjects wear a cap packed with electrodes that is hooked up to a computer. The electrodes gather data by measuring electrical signals from the brain, and the decoder interprets that information and translates it into game action.

Millán’s work on brain-computer interfaces helps users guide and strengthen their neural plasticity, the ability of the brain to change, grow, and reorganize over time. The experiments are designed both to improve patients’ brain function and to let patients use devices controlled by brain-computer interfaces to make their lives easier.

In this study, subjects performed two tasks: a car racing game and a simpler task of balancing the left and right sides of a digital bar. An expert was trained to develop a “decoder” for the simpler bar task that makes it possible for the interface to translate brain waves into commands. The decoder serves as a base for the other users and is the key to avoiding the long calibration process.

The decoder worked well enough that subjects trained simultaneously for the bar game and the more complicated car racing game, which required thinking several steps ahead to make turns.

The researchers call this work foundational, in that it sets the stage for further brain-computer interface innovation. This project involved 18 subjects with no motor impairments. Eventually, the researchers plan to test the interface on people with motor impairments, with the goal of applying it to larger groups in clinical settings.

“On the one hand, we want to translate the BCI to the clinical realm to help people with disabilities; on the other, we need to improve our technology to make it easier to use so that the impact for these people with disabilities is stronger,” Millán says.

On the side of translating the research, Millán and his team continue to work on a wheelchair that users can drive with the brain-computer interface. At the South by Southwest Conference and Festivals this month, the researchers showed off another potential use of the technology: controlling two rehabilitation robots for the hand and arm. This was not part of the new paper but is a sign of where this technology could go in the future. Several people volunteered and succeeded in operating the brain-controlled robots within minutes.

“The point of this technology is to help people, help them in their everyday lives,” Millán says. “We’ll continue down this path wherever it takes us in the pursuit of helping people.”

Source: University of Texas at Austin