A new tiny, self-driving robot powered only by surrounding light or radio waves can run indefinitely on harvested power.

Small mobile robots carrying sensors could perform tasks like catching gas leaks or tracking warehouse inventory. But moving a robot takes a lot of energy, and batteries, the typical power source, limit a robot's lifetime and raise environmental concerns.

Researchers have explored various alternatives: affixing sensors to insects, keeping charging mats nearby, or powering the robots with lasers. Each has drawbacks. Insects roam. Chargers limit range. Lasers can burn people’s eyes.

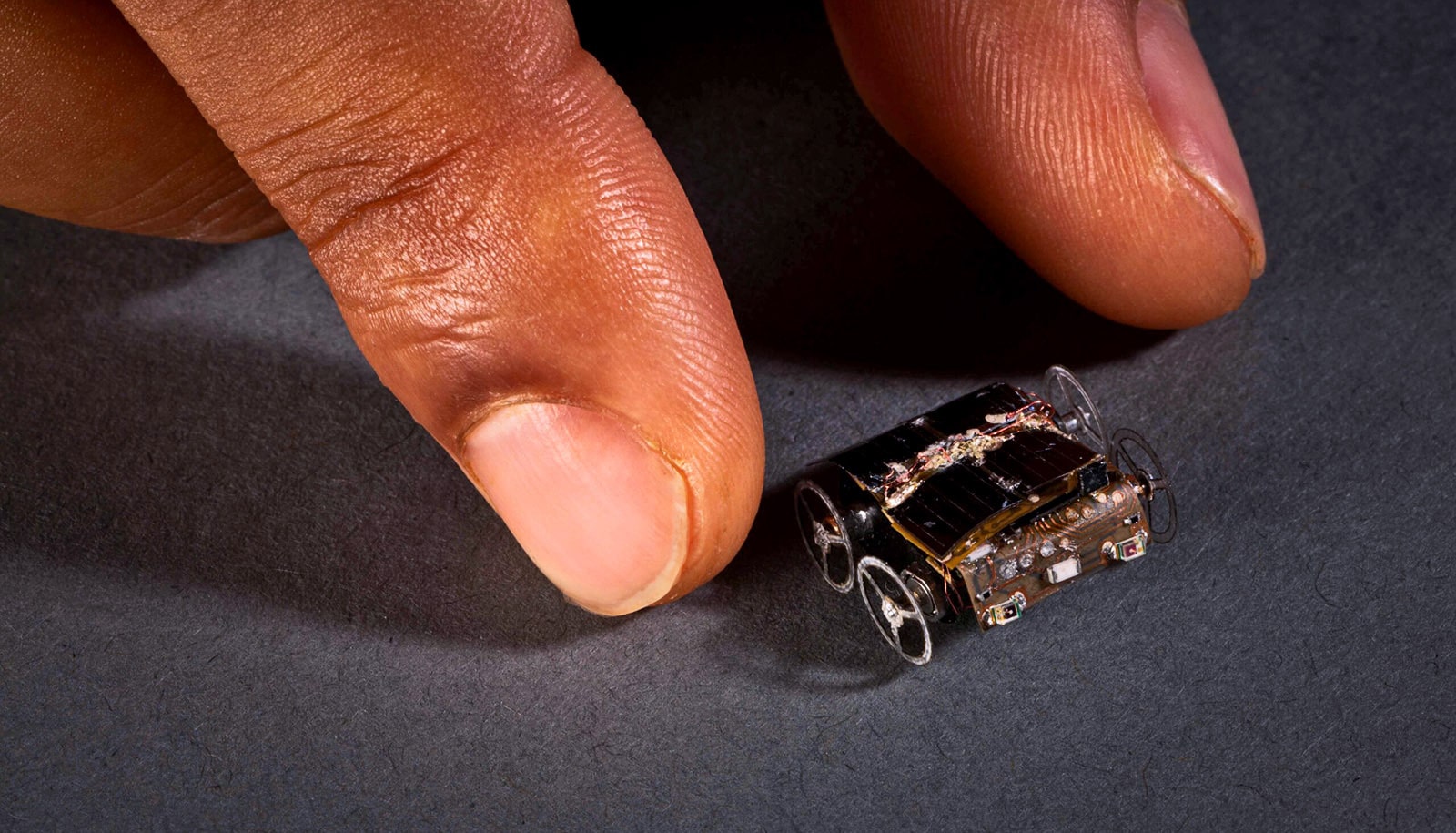

Researchers have now created MilliMobile, a self-driving robot that is about the size of a penny, weighs as much as a raisin, and can move about the length of a bus (30 feet, or 10 meters) in an hour, even on a cloudy day.

The robot can drive on surfaces such as concrete or packed soil and carry nearly three times its own weight in equipment like a camera or sensors. It uses a light sensor to move automatically toward light sources.

“We took inspiration from ‘intermittent computing,’ which breaks complex programs into small steps, so a device with very limited power can work incrementally, as energy is available,” says co-lead author Kyle Johnson, a doctoral student in the Paul G. Allen School of Computer Science & Engineering at the University of Washington.

“With MilliMobile, we applied this concept to motion. We reduced the robot’s size and weight so it takes only a small amount of energy to move. And, similar to an animal taking steps, our robot moves in discrete increments, using small pulses of energy to turn its wheels.”
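The charge-then-pulse idea Johnson describes can be illustrated with a toy simulation. This is a minimal sketch under assumed numbers, not MilliMobile's firmware: the `simulate` function, the harvest rates, the 300 µJ cost per motor pulse, the 500 µJ storage capacity, and the 1 mm step per pulse are all hypothetical values chosen for easy arithmetic.

```python
def simulate(harvest_uw: float, seconds: int,
             pulse_cost_uj: float = 300.0,
             capacity_uj: float = 500.0,
             step_mm: float = 1.0) -> float:
    """Toy model of intermittent motion; all parameters are hypothetical.

    Each simulated second the harvester adds harvest_uw microwatts * 1 s,
    i.e. harvest_uw microjoules, to a storage capacitor. Whenever the stored
    energy covers one motor pulse, the robot spends it and advances one
    fixed step. Returns the total distance traveled in millimeters.
    """
    stored_uj = 0.0
    distance_mm = 0.0
    for _ in range(seconds):
        # Charge phase: bank harvested energy, capped at the capacitor's limit.
        stored_uj = min(capacity_uj, stored_uj + harvest_uw)
        # Move phase: fire a pulse only once its full cost is available.
        if stored_uj >= pulse_cost_uj:
            stored_uj -= pulse_cost_uj
            distance_mm += step_mm
    return distance_mm


print(simulate(300, 3600))  # brighter light: one pulse every second → 3600.0
print(simulate(30, 3600))   # dimmer light: one pulse every ten seconds → 360.0
```

With these made-up numbers, a tenth of the harvested power still moves the robot, just a tenth as far: it waits longer between pulses rather than stopping, which is the point of working incrementally as energy becomes available.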

The team tested MilliMobile both indoors and outdoors, in environments such as parks, an indoor hydroponic farm, and an office. Even in very low light situations (for instance, powered only by the lights under a kitchen counter) the robots are still able to inch along, though much more slowly.

Being able to run continuously, even at that pace, opens up new possibilities for swarms of robots deployed in areas where other sensors struggle to gather detailed data.

The robots are also able to steer themselves, navigating with onboard sensors and tiny computing chips. To demonstrate this, the team programmed the robots to use their onboard light sensors to move towards a light source.
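Light-seeking of this kind can be sketched as a simple scan-and-step loop. This is a hypothetical illustration, not the team's navigation code: it assumes the robot can sample light at eight headings (for example by pivoting in place), uses an invented inverse-square sensor model (`make_light_sensor`), and moves a fixed 1 mm per step.

```python
import math
from typing import Callable

# Hypothetical sensor signature: (x, y, heading in degrees) -> light reading.
LightSensor = Callable[[float, float, float], float]


def make_light_sensor(light_x: float, light_y: float) -> LightSensor:
    """Invented model of a directional light sensor facing a point source."""
    def read(x: float, y: float, heading_deg: float) -> float:
        dx, dy = light_x - x, light_y - y
        # Small epsilon avoids division by zero when sitting on the source.
        dist2 = dx * dx + dy * dy + 1e-9
        bearing = math.atan2(dy, dx)
        # Strongest response when the sensor points straight at the light.
        facing = math.cos(bearing - math.radians(heading_deg))
        return max(0.0, facing) / dist2
    return read


def seek_light(read_light: LightSensor, x: float, y: float,
               steps: int, step_mm: float = 1.0) -> tuple[float, float]:
    """Scan eight headings, turn toward the brightest, take one step; repeat."""
    headings = range(0, 360, 45)
    for _ in range(steps):
        best = max(headings, key=lambda h: read_light(x, y, h))
        x += step_mm * math.cos(math.radians(best))
        y += step_mm * math.sin(math.radians(best))
    return x, y


sensor = make_light_sensor(100.0, 0.0)  # light 100 mm to the east
print(seek_light(sensor, 0.0, 0.0, steps=50))  # → (50.0, 0.0)
```

A scan-then-commit loop like this fits a pulse-driven robot: each reading and each step is a small, self-contained unit of work that can wait until enough energy has been harvested.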

“‘Internet of Things’ sensors are usually fixed in specific locations,” says co-lead author Zachary Englhardt, a doctoral student in the Allen School. “Our work crosses domains to create robotic sensors that can sample data at multiple points throughout a space to create a more detailed view of its environment, whether that’s a smart farm where the robots are tracking humidity and soil moisture, or a factory where they’re seeking out electromagnetic noise to find equipment malfunctions.”

Researchers have outfitted MilliMobile with light, temperature, and humidity sensors as well as Bluetooth, letting it transmit data as far as 650 feet (200 meters). In the future, they plan to add other sensors and improve data-sharing among swarms of these robots.

The team will present its research October 2 at the ACM MobiCom 2023 conference in Madrid, Spain.

Vicente Arroyos, a doctoral student in the Allen School, is a co-lead author of the study. Dennis Yin, who completed this work as an undergraduate in electrical and computer engineering, and Shwetak Patel, a professor in the Allen School and in electrical and computer engineering, are coauthors, and Vikram Iyer, assistant professor in the Allen School, is the study’s senior author.

The research received funding from an Amazon Research Award, a Google Research Scholar award, the National Science Foundation Graduate Research Fellowship Program, the National GEM Consortium, the Washington NASA Space Grant Consortium, the Pastry-Powered T(o)uring Machine Endowed Fellowship, and the SPEEA ACE fellowship program.

Source: University of Washington