As robots assume more roles in the world, a new analysis reviewed research on robot rights, concluding that granting rights to robots is a bad idea.

Philosophers and legal scholars have explored significant aspects of the moral and legal status of robots, with some advocating for giving robots rights.

The analysis, published in Communications of the ACM, looks to Confucianism to offer an alternative.

“People are worried about the risks of granting rights to robots,” notes Tae Wan Kim, associate professor of business ethics at Carnegie Mellon University’s Tepper School of Business, who conducted the analysis. “Granting rights is not the only way to address the moral status of robots: Envisioning robots as rites bearers, not rights bearers, could work better.”

Various non-natural entities—such as corporations—are considered people and even assume some Constitutional rights. In addition, humans are not the only species with moral and legal status; in most developed societies, moral and legal considerations preclude researchers from gratuitously using animals for lab experiments.

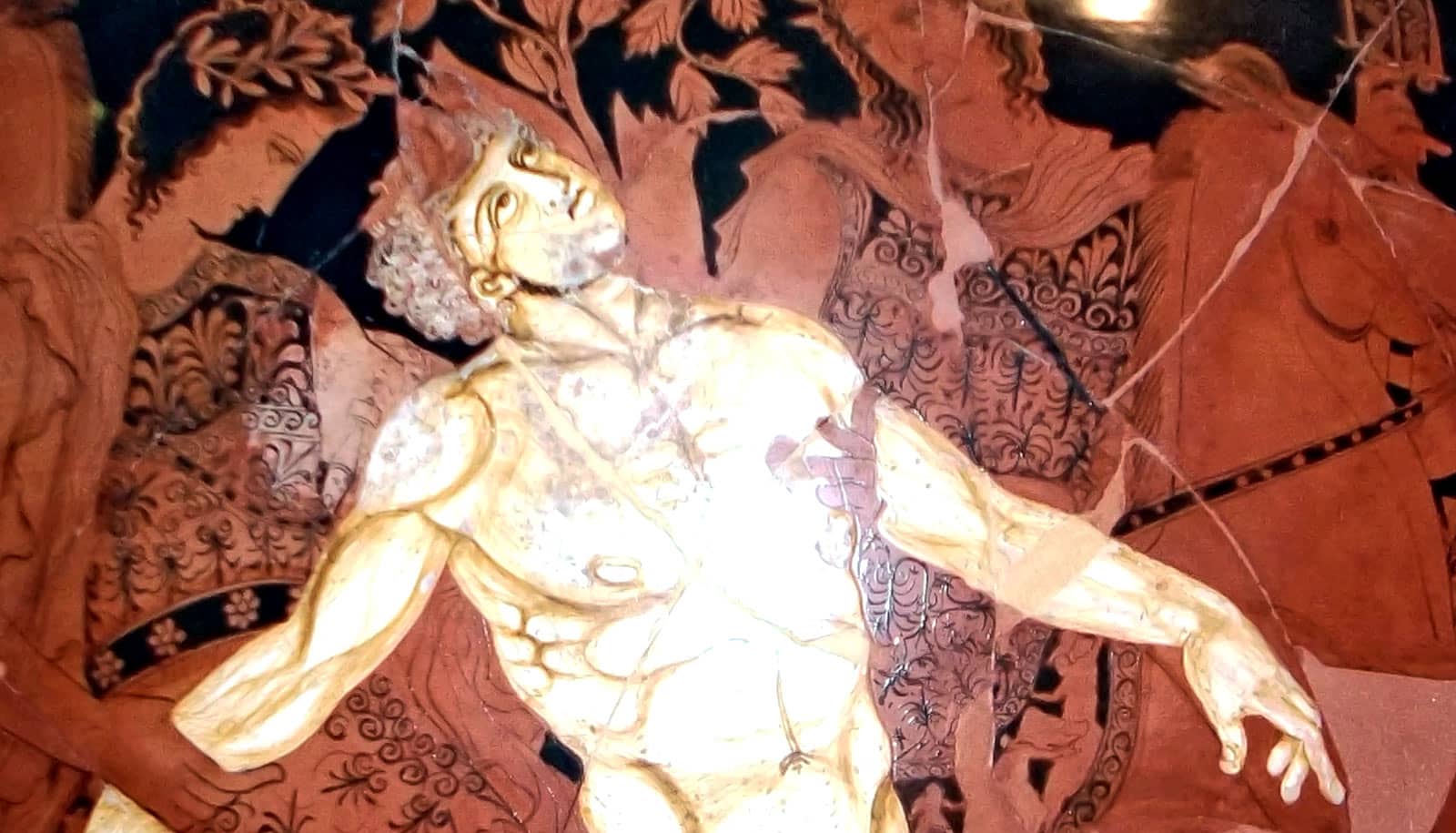

Although many believe that respecting robots should lead to granting them rights, Kim argues for a different approach. Confucianism, an ancient Chinese belief system, focuses on the social value of achieving harmony; individuals are made distinctively human by their ability to conceive of interests not purely in terms of personal self-interest, but in terms that include a relational and a communal self. This, in turn, requires a unique perspective on rites, with people enhancing themselves morally by participating in proper rituals.

When considering robots, Kim suggests that the Confucian alternative of assigning rites—or what he calls role obligations—to robots is more appropriate than giving robots rights. The concept of rights is often adversarial and competitive, and potential conflict between humans and robots is concerning.

“Assigning role obligations to robots encourages teamwork, which triggers an understanding that fulfilling those obligations should be done harmoniously,” explains Kim.

“Artificial intelligence (AI) imitates human intelligence, so for robots to develop as rites bearers, they must be powered by a type of AI that can imitate humans’ capacity to recognize and execute team activities—and a machine can learn that ability in various ways.”

Kim acknowledges that some will question why robots should be treated respectfully in the first place.

“To the extent that we make robots in our image, if we don’t treat them well, as entities capable of participating in rites, we degrade ourselves,” he suggests.

Source: Carnegie Mellon University