A new device called HandMorph could help adults experience what it’s like to interact with the world with hands the size of a child’s.

As an adult, it’s hard to imagine how children experience a world built for grownups. What is it really like for someone lower to the ground, with shorter limbs and smaller hands, to navigate a home, a school, or a park?

Everyone from toy designers and teachers to doctors and parents could benefit from a realistic few minutes in a child’s shoes.

“Does changing bodies change our perspective?”

In a series of research projects, postdoctoral researcher Jun Nishida has explored new technologies that help people experience the point of view of others unlike themselves.

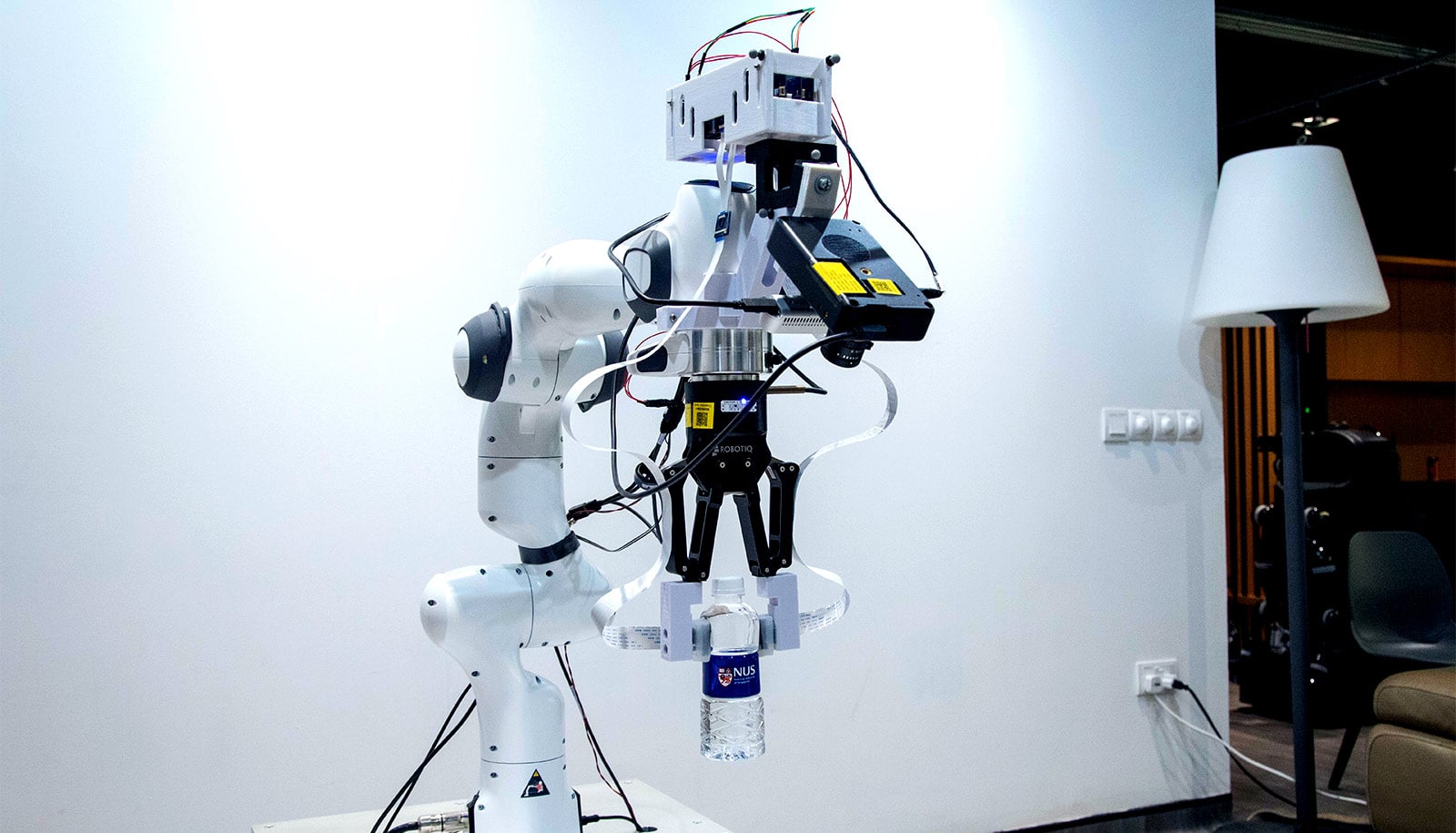

Nishida works in the Human Computer Integration Lab of Pedro Lopes, an assistant professor in the Department of Computer Science at the University of Chicago. Nishida’s HandMorph uses a wearable exoskeleton to simulate the hand of a child, reducing its wearer’s grasp.

The device is the latest Nishida has invented to change people’s perception and body movements, following a custom headset that recreates the visual perspective of a small child and bioSync, a wearable using electrical muscle stimulation that synchronizes the movements of two individuals, even if they are far apart.

“I’m interested in changing the body’s function to have the experience of another person, and how that changes human perception. Does changing bodies change our perspective?” Nishida says.

“These changes can affect the attitude or social relationship between people and could be useful for gaining a kind of embodied knowledge or increased empathy towards children or people different from yourself.”

HandMorph presents a unique spin on wearable technology, because no computers, electronics, or virtual reality devices are involved. The device is 3D printed with just a few dollars of materials, and slips onto its user’s hand like a glove.

Through tiny mechanical links, the device translates hand movements to an attached small silicone hand and provides realistic haptic feedback. A wire between the glove and the user’s waist adds to the experience by restricting reach to the range of a small child.

“Other researchers have used virtual reality avatars to change people’s perception of their body or environment, and there’s value in that,” says Lopes, whose research focuses on integrating computer interfaces with the human body. “But we wanted to ask a harder question, which is can we change people’s experience while you’re still in the real world, interacting with people and objects around you. That challenges people to navigate their actual space with a smaller hand and see how that feels.”

Yet the researchers found that this simple, user-friendly setup could produce perceptual changes. After some minutes of wearing the HandMorph device and interacting with plastic balls of various sizes, subjects perceived the objects as larger while wearing the glove than when holding the balls with their ungloved hand. The result showed how quickly a wearable device can alter the brain’s map of body representation and, with it, the wearer’s experience.

The researchers followed up this experiment with a more practical user study, testing whether people would find HandMorph useful in a design exercise. Subjects were given a toy trumpet and asked to improve its design for small children, molding clay while using either the HandMorph glove or a “spec sheet” of measurements for child hand size. The participants felt more confident in their designs, used the wearable device much more often, and fixed five of the six design flaws in the original toy.

“It appears that feeling the tool and being able to act in real surroundings is much more powerful than being digitally immersed either in VR or computer-assisted design software, which gives you the 3D features of these toys, but not the context,” Lopes says. “When people were designing this object, they got really excited and were more confident that they were making fewer mistakes and that this was going to be usable by a child.”

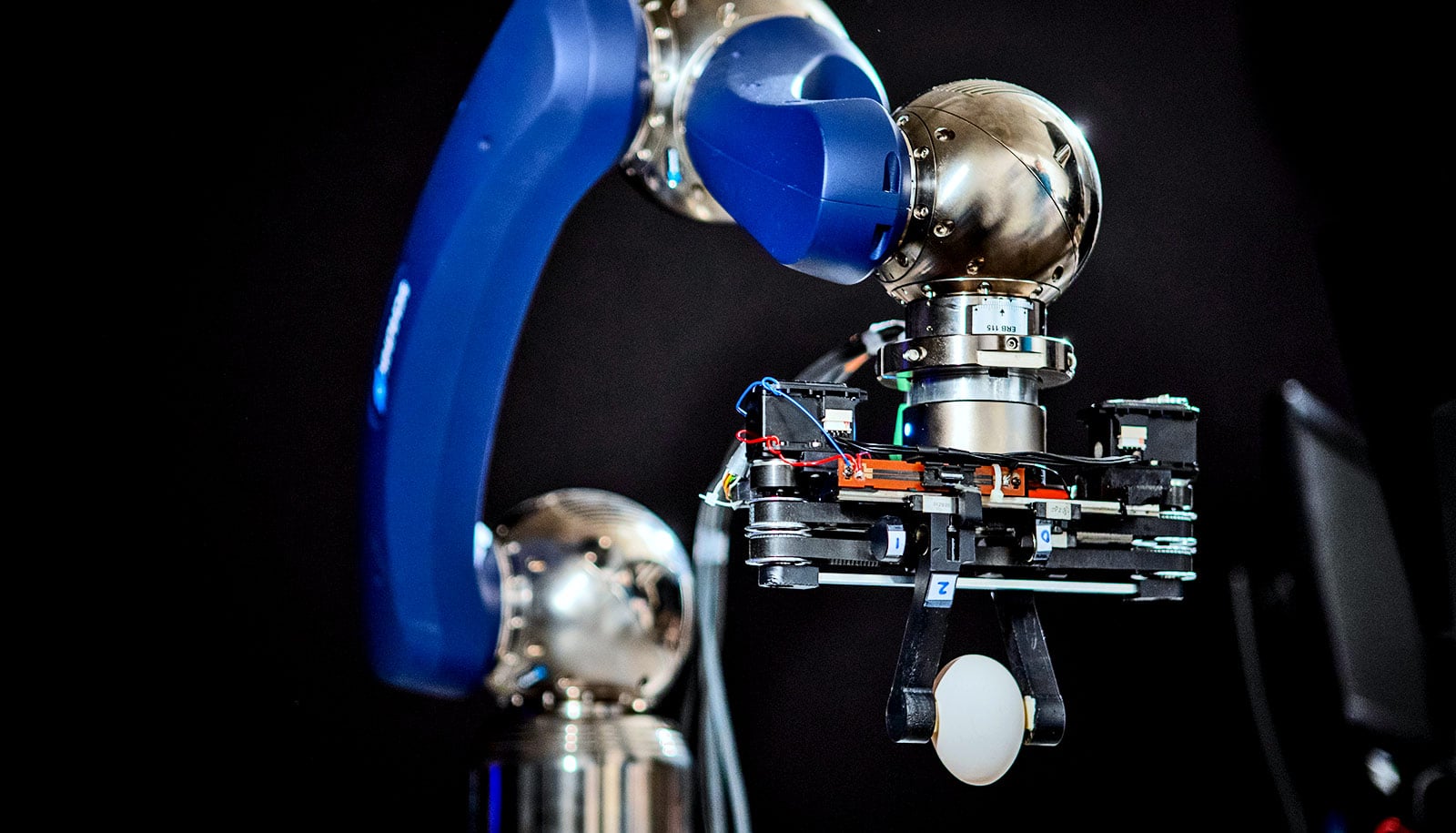

Apart from this design application, the researchers also propose that a similar device could be useful for assisting with surgery or other fine motor tasks, translating normal hand movements into smaller actions. In the future, they’d like to explore uses of technology that manipulates perception of leg size or step, making their simulation of a child’s perspective and experience even more immersive.

The researchers presented their work at the 2020 ACM Symposium on User Interface Software and Technology.

Source: University of Chicago