New research suggests Siri and other digital helpers could someday learn the algorithms that humans have used for centuries to create and understand metaphorical language.

For example, ask Siri to find a math tutor to help you “grasp” calculus and she’s likely to respond that your request is beyond her abilities. That’s because metaphors like “grasp” are difficult for Apple’s voice-controlled personal assistant to, well, grasp.

Mapping 1,100 years of metaphoric English language, researchers have detected patterns in how English speakers have added figurative word meanings to their vocabulary.

The results, published in the journal Cognitive Psychology, demonstrate how throughout history humans have used language that originally described palpable experiences such as “grasping an object” to describe more intangible concepts such as “grasping an idea.”

“The use of concrete language to talk about abstract ideas may unlock mysteries about how we are able to communicate and conceptualize things we can never see or touch,” says study senior author Mahesh Srinivasan, an assistant professor of psychology at the University of California, Berkeley. “Our results may also pave the way for future advances in artificial intelligence.”

The findings provide the first large-scale evidence that the creation of new metaphorical word meanings is systematic, the researchers say. The results could also inform efforts to design natural language processing systems like Siri so that they better understand creativity in human language.

“Although such systems are capable of understanding many words, they are often tripped up by creative uses of words that go beyond their existing, pre-programmed vocabularies,” says lead author Yang Xu, a postdoctoral researcher in linguistics and cognitive science at UC Berkeley.

“This work brings opportunities toward modeling metaphorical words at a broad scale, ultimately allowing the construction of artificial intelligence systems that are capable of creating and comprehending metaphorical language,” he adds.

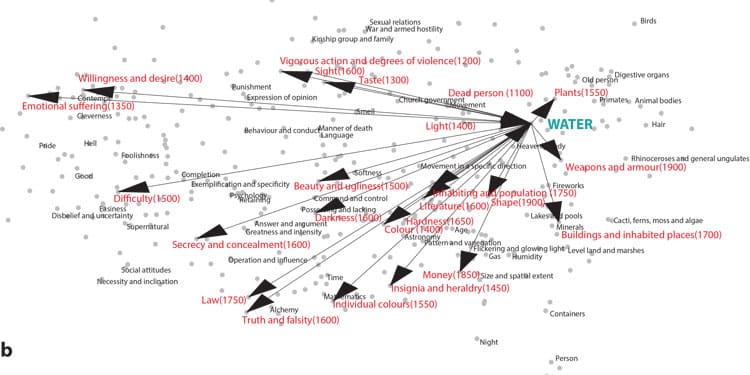

Using the Metaphor Map of English database, researchers examined more than 5,000 examples from the past millennium in which word meanings from one semantic domain, such as “water,” were extended to another semantic domain, such as “mind.”

Researchers called the original semantic domain the “source domain” and the domain to which the metaphorical meaning was extended the “target domain.”

More than 1,400 online participants were recruited to rate semantic domains such as “water” or “mind” according to the degree to which they were related to the external world (light, plants), animate things (humans, animals), or intense emotions (excitement, fear).

These ratings were fed into computational models that the researchers had developed to predict which semantic domains had been the sources or targets of metaphorical extension.

In comparing their computational predictions against the actual historical record provided by the Metaphor Map of English, researchers found that their models correctly forecast about 75 percent of recorded metaphorical language mappings over the past millennium.

Furthermore, they found that the degree to which a domain is tied to experience in the external world, such as “grasping a rope,” was the primary predictor of how a word would take on a new metaphorical meaning such as “grasping an idea.”

For example, time and again, researchers found that words associated with textiles, digestive organs, wetness, solidity, and plants were more likely to provide sources for metaphorical extension, while mental and emotional states, such as excitement, pride, and fear, were more likely to be the targets of metaphorical extension.
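
To make the idea concrete, here is a minimal sketch of how a direction-predicting rule of this kind might work. It is not the researchers' model. It assumes a single hypothetical "externality" score per domain and guesses that the more external domain is the source. Every rating and every recorded mapping below is invented for illustration, and the function names are placeholders.

```python
# Hypothetical externality ratings (0 = internal/abstract, 1 = external/concrete).
# These numbers are invented for illustration; they are not the study's data.
EXTERNALITY = {
    "water": 0.90,
    "textiles": 0.85,
    "plants": 0.95,
    "mind": 0.20,
    "fear": 0.15,
    "pride": 0.10,
}


def predict_direction(domain_a, domain_b, ratings=EXTERNALITY):
    """Return (source, target), guessing the more external domain is the source."""
    if ratings[domain_a] >= ratings[domain_b]:
        return domain_a, domain_b
    return domain_b, domain_a


def accuracy(recorded_mappings, ratings=EXTERNALITY):
    """Fraction of recorded (source, target) pairs whose direction the rule gets right."""
    hits = sum(
        predict_direction(source, target, ratings) == (source, target)
        for source, target in recorded_mappings
    )
    return hits / len(recorded_mappings)


# Invented (source, target) pairs standing in for a historical record.
# The last pair deliberately runs against the rule.
RECORDED = [
    ("water", "mind"),
    ("plants", "pride"),
    ("textiles", "fear"),
    ("fear", "water"),
]

print(predict_direction("mind", "water"))
print(accuracy(RECORDED))
```

The score this toy example prints reflects only the made-up list above and says nothing about the study's own results. The actual models also drew on ratings of animacy and emotional intensity, which this sketch leaves out.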

Srinivasan and Xu conducted the study with Lehigh University psychology professor Barbara Malt.

Source: UC Berkeley