Researchers have created a team of free-flying robots that obeys the two rules of the air: don’t collide or undercut each other. They’ve also built autonomous blimps that recognize hand gestures and detect faces.

In the first project, a swarm of five quadcopters zips back and forth in formation, then changes its behavior based on user commands. The trick is to maneuver without smacking into each other or flying underneath another machine. If a robot cuts into the airstream of a higher-flying quadcopter, the lower machine must quickly recover from the turbulent air or risk falling out of the sky.

“Ground robots have had built-in safety ‘bubbles’ around them for a long time to avoid crashing,” says Magnus Egerstedt, the Georgia Tech School of Electrical and Computer Engineering professor who oversees the project. “Our quadcopters must also include a cylindrical ‘do not touch’ area to avoid messing up the airflow for each other. They’re basically wearing virtual top hats.”

As long as the machines avoid flying in the two-foot space below their neighbor, they can swarm freely without a problem. That typically means they dart around each other rather than going low.

‘Top hats’

PhD student Li Wang figured out the size of the “top hat” one afternoon by hovering one copter in the air and sending others back and forth underneath it. Any closer than 0.6 meters (or five times the diameter from one rotor to another) and the machines were blasted to the ground. Then he created algorithms to allow them to change formation midflight.
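The “top hat” rule can be pictured as a simple geometric test. The sketch below is only an illustration, not the team's code: the 0.6-meter height comes from Wang's measurement, while the radius value, the `Position` class, and the `in_top_hat` function are hypothetical names and numbers chosen for the example.

```python
from dataclasses import dataclass

TOP_HAT_HEIGHT_M = 0.6  # from the article: closer than 0.6 m below is unsafe
TOP_HAT_RADIUS_M = 0.3  # hypothetical value; the article gives no radius


@dataclass
class Position:
    x: float  # meters
    y: float  # meters
    z: float  # altitude in meters


def in_top_hat(lower: Position, upper: Position,
               radius: float = TOP_HAT_RADIUS_M,
               height: float = TOP_HAT_HEIGHT_M) -> bool:
    """Return True if `lower` is inside the cylinder hanging below `upper`."""
    horizontal_sq = (lower.x - upper.x) ** 2 + (lower.y - upper.y) ** 2
    drop = upper.z - lower.z  # positive when `lower` is beneath `upper`
    return horizontal_sq < radius ** 2 and 0.0 < drop < height
```

A copter half a meter directly below its neighbor would fail this check, while one a full meter below, or one meter off to the side, would pass.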

“We figured out the smallest amount of modifications a quadcopter must make to its planned path to achieve the new formation,” says Wang. “Mathematically, that’s what a programmer wants—the smallest deviations from an original flight plan.”
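The idea of the smallest deviation can be shown with a toy version of the problem. The sketch below is not the researchers' algorithm, which the article does not describe; it assumes a single stationary neighbor, a hypothetical 0.3-meter cylinder radius, and a made-up function name, and it simply moves a planned waypoint to the nearest point outside the neighbor's “top hat.”

```python
import math


def smallest_deviation(planned, neighbor, radius=0.3, height=0.6):
    """Return the point closest to `planned` that lies outside the cylinder
    of `radius` extending `height` meters below `neighbor`.

    Points are (x, y, z) tuples in meters. The radius is a hypothetical
    value; only the 0.6 m height comes from the article.
    """
    px, py, pz = planned
    nx, ny, nz = neighbor
    dx, dy = px - nx, py - ny
    horizontal = math.hypot(dx, dy)
    drop = nz - pz  # positive when the waypoint is beneath the neighbor

    if horizontal >= radius or not (0.0 < drop < height):
        return planned  # already outside the cylinder: no change needed

    sideways_cost = radius - horizontal  # distance to the cylinder wall
    descend_cost = height - drop         # distance to the cylinder floor
    # Climbing is not considered: it would bring the copter level with
    # its neighbor and risk a collision.
    if sideways_cost <= descend_cost:
        if horizontal == 0.0:
            # Directly underneath: no preferred direction, so pick +x.
            dx, dy, horizontal = 1.0, 0.0, 1.0
        scale = radius / horizontal
        return (nx + dx * scale, ny + dy * scale, pz)
    return (px, py, nz - height)
```

With these assumed numbers, a waypoint 0.2 meters to the side and 0.3 meters below a neighbor is nudged 0.1 meters sideways rather than 0.3 meters down, which mirrors the darting-around behavior described earlier.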

The project is part of Egerstedt and Wang’s overall research, which focuses on easily controlling and interacting with large teams of robots.

“Our skies will become more congested with autonomous machines, whether they’re used for deliveries, agriculture, or search and rescue,” says Egerstedt, who directs Georgia Tech’s Institute for Robotics and Intelligent Machines. “It’s not possible for one person to control dozens or hundreds of robots at a time. That’s why we need machines to figure it out themselves.”

Blimps that can read your face

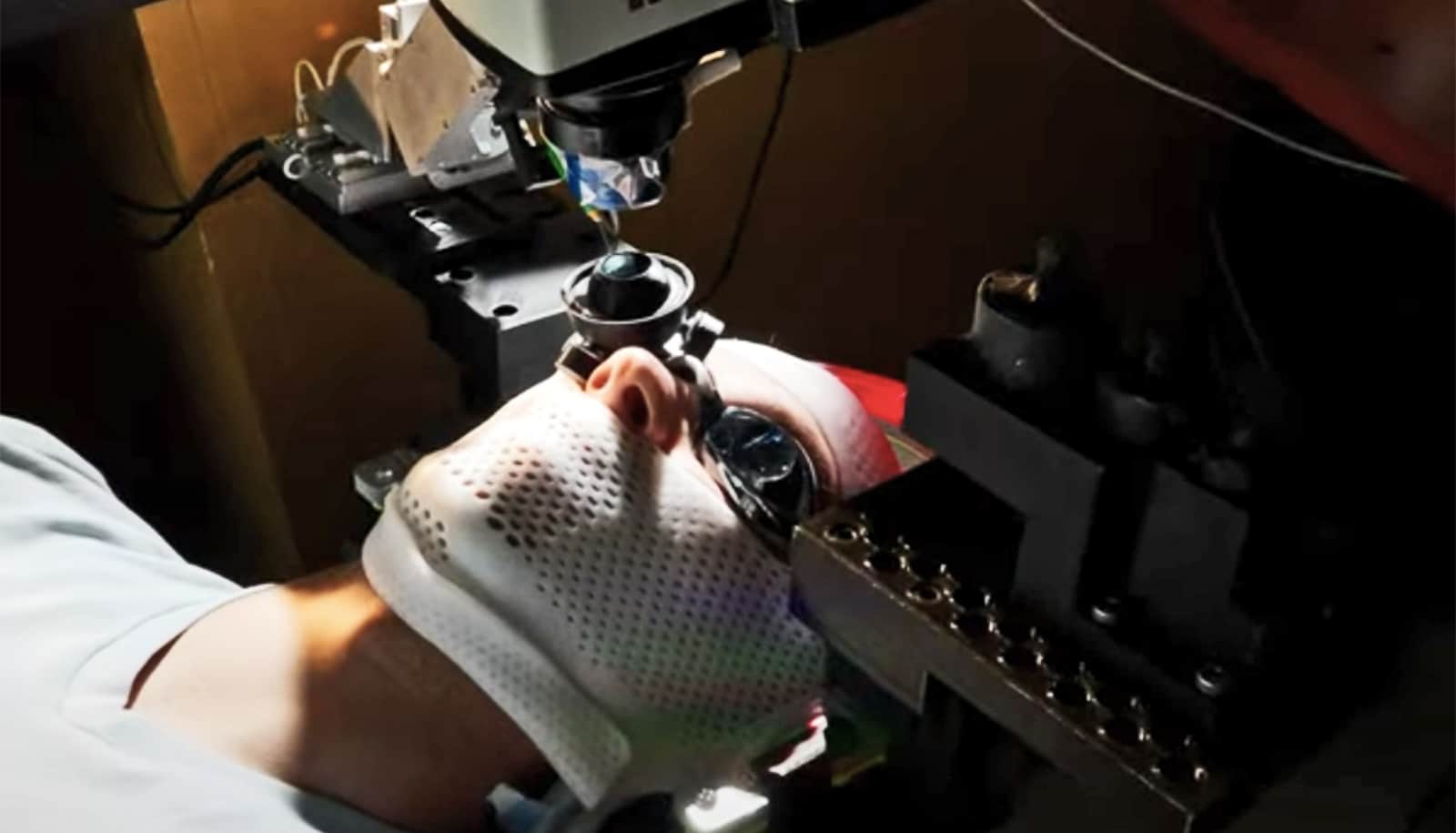

The researchers overseeing the second project, the blimps, 3D-printed a gondola frame that carries sensors and a mini camera. It attaches to either an 18- or 36-inch diameter balloon. The smaller blimp can carry a five-gram payload; the larger one supports 20 grams.

The autonomous blimps detect faces and hands, allowing people to direct the flyers with movements. All the while, the machine gathers information about its human operator, identifying everything from hesitant glares to eager smiles. The goal is to better understand how people interact with flying robots.

“Roboticists and psychologists have learned many things about how humans relate to robots on the ground, but we haven’t created techniques to study how we react to flying machines,” says Fumin Zhang, the associate professor leading the blimp project. “Flying a regular drone close to people presents a host of issues. But people are much more likely to approach and interact with a slow-moving blimp that looks like a toy.”

The blimps’ circular shape makes them harder to steer with manual controllers, but allows them to turn and quickly change direction. This is unlike the more popular zeppelin-shaped blimps that other researchers commonly use.

Zhang has filed a request with Guinness World Records for the smallest autonomous blimp. He sees a future where blimps can play a role in people’s lives, but only if roboticists can determine what people want and how they’ll react to a flying companion.

“Imagine a blimp greeting you at the front of the hardware store, ready to offer assistance,” Zhang says. “People are good at reading people’s faces and sensing if they need help or not. Robots could do the same. And if you needed help, the blimp could ask, then lead you to the correct aisle, flying above the crowds and out of the way.”

Members of the teams will present both projects at the 2017 IEEE International Conference on Robotics and Automation (ICRA), May 29-June 3 in Singapore.

Source: Georgia Tech