Researchers have devised a new way to automatically and efficiently gather data about a plant’s phenotype: the physical traits that emerge from its genetic code.

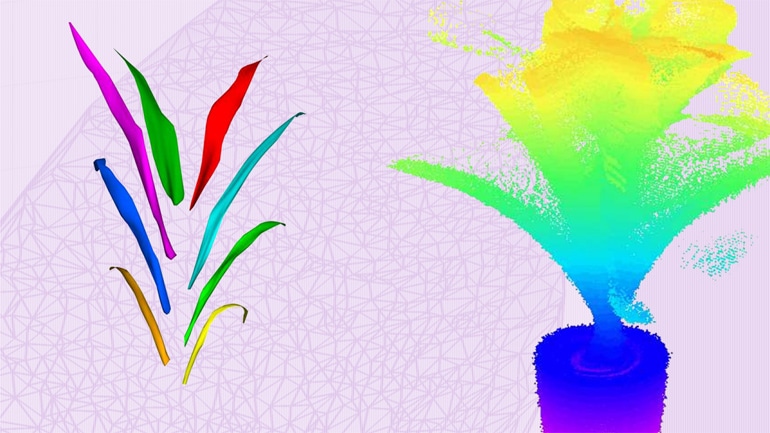

Each rendering and its associated data come courtesy of LiDAR, a technology that fires pulsed laser light at a surface and measures the time it takes for those pulses to reflect back—the greater the delay, the greater the distance. By scanning a plant throughout its rotation, this 360-degree LiDAR technique can collect millions of 3D coordinates that a sophisticated algorithm then clusters and digitally molds into the components of the plant: leaves, stalks, ears.
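The two steps described here, turning a pulse's delay into a distance and turning a distance into a 3D coordinate, can be sketched in a few lines. This is a minimal illustration, not the team's software. It assumes a hypothetical geometry in which a fixed sensor points at the plant's rotation axis and the plant sits on a turntable, and the names `range_from_delay` and `to_plant_frame` are invented for the example.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def range_from_delay(round_trip_s):
    """Distance to the reflecting surface, in metres.

    The pulse travels to the surface and back, so the one-way
    distance is half the total path length.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_plant_frame(range_m, elevation_rad, turntable_rad,
                   sensor_offset_m, sensor_height_m=0.0):
    """Convert one range reading to (x, y, z) in the plant's own frame.

    Assumed geometry (hypothetical): the sensor sits sensor_offset_m
    from the rotation axis, aims at that axis, and sweeps its beam in
    a vertical plane. The direction of rotation is also an assumption.
    """
    horizontal = range_m * math.cos(elevation_rad)  # distance along the ground
    radial = sensor_offset_m - horizontal           # signed distance from the axis
    z = sensor_height_m + range_m * math.sin(elevation_rad)
    x = radial * math.cos(turntable_rad)
    y = radial * math.sin(turntable_rad)
    return (x, y, z)
```

Under these assumptions, a round-trip delay of 10 nanoseconds corresponds to a surface roughly 1.5 meters away. Repeating the conversion for every pulse at every turntable angle yields the kind of point cloud that the clustering algorithm then works on.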

The digital 3D images are in the palette of Dr. Seuss: magenta, teal, and yellow, each leaf rendered in a different hue but nearly identical to its actual counterpart in shape, size, and angle.

The faster and more accurately that researchers can collect phenotypic data, the more easily they can compare crops that have been bred or genetically engineered for specific traits—ideally those that help produce more food.

Accelerating that effort is especially important, say the researchers, to meet the food demands of a global population expected to grow from about 7.5 billion people today to nearly 10 billion in 2050.

“We can already do DNA sequencing and genomic research very rapidly,” says Yufeng Ge, assistant professor of biological systems engineering at the University of Nebraska-Lincoln. “To use that information more effectively, you have to pair it with phenotyping data. That will allow you to go back and investigate the genetic information more closely. But that is now (reaching) a bottleneck, because we can’t do that as fast as we want at a low cost.”

At three minutes per plant, the team’s set-up operates substantially faster than most other phenotyping techniques, Ge says. But speed matters little without accuracy, so the team also used the system to estimate four traits of corn and sorghum plants.

The first two traits—the surface area of individual leaves and of all leaves on a plant—help determine how much energy-producing photosynthesis the plant can perform. The other two—the angle at which leaves protrude from a stalk and how much those angles vary within a plant—influence both photosynthesis and how densely a crop can be planted in a field.
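Once a leaf exists as a 3D surface, these four traits reduce to simple geometry. The sketch below is illustrative only and makes several assumptions the article does not confirm: that each leaf is available as a triangle mesh, that the stalk and leaf directions are already known as vectors, and that "how much those angles vary" is measured as a standard deviation. All function names are hypothetical.

```python
import math
import statistics


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def _norm(u):
    return math.sqrt(sum(c * c for c in u))


def leaf_area(vertices, triangles):
    """Surface area of one leaf given as a triangle mesh.

    Each triangle is a tuple of three vertex indices; its area is
    half the length of the cross product of two edge vectors.
    """
    total = 0.0
    for i, j, k in triangles:
        edge_a = _sub(vertices[j], vertices[i])
        edge_b = _sub(vertices[k], vertices[i])
        total += 0.5 * _norm(_cross(edge_a, edge_b))
    return total


def total_leaf_area(leaves):
    """Sum of leaf areas; leaves is a list of (vertices, triangles) pairs."""
    return sum(leaf_area(v, t) for v, t in leaves)


def leaf_angle_deg(stalk_dir, leaf_dir):
    """Angle in degrees between the stalk direction and a leaf direction."""
    dot = sum(s * l for s, l in zip(stalk_dir, leaf_dir))
    cos_angle = dot / (_norm(stalk_dir) * _norm(leaf_dir))
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding error
    return math.degrees(math.acos(cos_angle))


def leaf_angle_spread_deg(angles_deg):
    """Variation of leaf angles within one plant (assumed: population std. dev.)."""
    return statistics.pstdev(angles_deg)
```

For example, a flat square leaf one unit on a side, split into two triangles, has an area of 1.0, and a leaf pointing equally upward and outward from a vertical stalk makes a 45-degree angle with it.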

Comparing the system’s estimates with careful measurements of the corn and sorghum plants revealed promising results: 91 percent agreement on the surface area of individual leaves and 95 percent on total leaf area. The accuracy of angular estimates was generally lower but still ranged from 72 percent to 90 percent, depending on the variable and type of plant.

To date, the most common form of 3D phenotyping has relied on stereo-vision: two cameras that simultaneously capture images of a plant and merge their perspectives into an approximation of 3D by identifying the same points from both images.
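The way matching points become depth can be shown with the standard textbook relation for two parallel, calibrated cameras: depth equals focal length times the distance between the cameras, divided by how far the point shifts between the two images. The sketch below assumes that idealized setup, and `depth_from_disparity` is a hypothetical name rather than part of any system described here.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth of a matched point for two parallel (rectified) cameras.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centres, in metres
    disparity_px:    horizontal shift of the point between the images
    """
    if disparity_px <= 0:
        # No shift means the point is effectively at infinity or the match failed.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px
```

With a 1,000-pixel focal length and cameras 0.1 meters apart, a point that shifts 50 pixels between the images lies 2 meters away. A point hidden from either camera has no match at all, so no depth can be computed for it.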

Though imaging has revolutionized phenotyping in many ways, it does have shortcomings. The greatest, Ge says, is an inevitable loss of spatial information during the translation from 3D to 2D, especially when one part of a plant blocks a camera’s view of another part.

“It has been particularly challenging for traits like leaf area and leaf angle, because the image does not preserve those traits very well,” Ge says.

The 360-degree LiDAR approach contends with fewer of those issues, the researchers say, and demands fewer computational resources when constructing a 3D image from its data.

“LiDAR is advantageous in terms of the throughput and speed and in terms of accuracy and resolution,” says Suresh Thapa, a doctoral student in biological systems engineering. “And it’s becoming more economical (than before).”


Going forward, the team wants to introduce lasers of different colors to its LiDAR set-up. The way a plant reflects those additional lasers will help indicate how it takes up water and nitrogen, both essential to plant growth, and how it produces the chlorophyll necessary for photosynthesis.

“If we can tackle those three (variables) on the chemical side and these other four (variables) on the morphological side, and then combine them, we’ll have seven properties that we can measure simultaneously,” Ge says. “Then I will be really happy.”

The researchers report their work in the journal Sensors. The team received support from the National Science Foundation.

Source: University of Nebraska-Lincoln